Fix broken links to images, make all image links absolute.

Fixes #8064, fixes #7685. (after docs republish) Change: 154614227

This commit is contained in:

parent

2d264f38fd

commit

1d679a0476

@ -209,7 +209,7 @@ The input tensors are all required to have size 1 in the first dimension.

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 'x' is [[1, 4]]

|

||||

# 'y' is [[2, 5]]

|

||||

# 'z' is [[3, 6]]

|

||||

@ -277,7 +277,7 @@ Etc.

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 'x' is [1, 4]

|

||||

# 'y' is [2, 5]

|

||||

# 'z' is [3, 6]

|

||||

@ -432,7 +432,7 @@ Computes offsets of concat inputs within its output.

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 'x' is [2, 2, 7]

|

||||

# 'y' is [2, 3, 7]

|

||||

# 'z' is [2, 5, 7]

|

||||

@ -670,7 +670,7 @@ rank 2k with dimensions [D1,..., Dk, D1,..., Dk] where:

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 'diagonal' is [1, 2, 3, 4]

|

||||

tf.diag(diagonal) ==> [[1, 0, 0, 0]

|

||||

[0, 2, 0, 0]

|

||||

@ -722,7 +722,7 @@ tensor of rank `k` with dimensions `[D1,..., Dk]` where:

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 'input' is [[1, 0, 0, 0]

|

||||

[0, 2, 0, 0]

|

||||

[0, 0, 3, 0]

|

||||

@ -768,7 +768,7 @@ tensor of rank `k+1` with dimensions [I, J, K, ..., N, N]` where:

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 'diagonal' is [[1, 2, 3, 4], [5, 6, 7, 8]]

|

||||

|

||||

and diagonal.shape = (2, 4)

|

||||

@ -880,7 +880,7 @@ The input must be at least a matrix.

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 'input' is [[[1, 0, 0, 0]

|

||||

[0, 2, 0, 0]

|

||||

[0, 0, 3, 0]

|

||||

@ -927,7 +927,7 @@ The indicator function

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# if 'input' is [[ 0, 1, 2, 3]

|

||||

[-1, 0, 1, 2]

|

||||

[-2, -1, 0, 1]

|

||||

@ -946,7 +946,7 @@ tf.matrix_band_part(input, 2, 1) ==> [[ 0, 1, 0, 0]

|

||||

|

||||

Useful special cases:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

tf.matrix_band_part(input, 0, -1) ==> Upper triangular part.

|

||||

tf.matrix_band_part(input, -1, 0) ==> Lower triangular part.

|

||||

tf.matrix_band_part(input, 0, 0) ==> Diagonal.

|

||||

@ -998,7 +998,7 @@ of `tensor` must equal the number of elements in `dims`. In other words:

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# tensor 't' is [[[[ 0, 1, 2, 3],

|

||||

# [ 4, 5, 6, 7],

|

||||

# [ 8, 9, 10, 11]],

|

||||

@ -1074,7 +1074,7 @@ once, a InvalidArgument error is raised.

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# tensor 't' is [[[[ 0, 1, 2, 3],

|

||||

# [ 4, 5, 6, 7],

|

||||

# [ 8, 9, 10, 11]],

|

||||

@ -1245,7 +1245,7 @@ This operation creates a tensor of shape `dims` and fills it with `value`.

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# Output tensor has shape [2, 3].

|

||||

fill([2, 3], 9) ==> [[9, 9, 9]

|

||||

[9, 9, 9]]

|

||||

@ -1354,7 +1354,7 @@ out-of-bound indices result in safe but unspecified behavior, which may include

|

||||

raising an error.

|

||||

|

||||

<div style="width:70%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../../images/Gather.png" alt>

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/Gather.png" alt>

|

||||

</div>

|

||||

)doc");

|

||||

|

||||

@ -1610,7 +1610,7 @@ implied by `shape` must be the same as the number of elements in `tensor`.

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# tensor 't' is [1, 2, 3, 4, 5, 6, 7, 8, 9]

|

||||

# tensor 't' has shape [9]

|

||||

reshape(t, [3, 3]) ==> [[1, 2, 3],

|

||||

@ -1697,7 +1697,7 @@ The values must include 0. There can be no duplicate values or negative values.

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# tensor `x` is [3, 4, 0, 2, 1]

|

||||

invert_permutation(x) ==> [2, 4, 3, 0, 1]

|

||||

```

|

||||

@ -1802,7 +1802,7 @@ in the unique output `y`. In other words:

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# tensor 'x' is [1, 1, 2, 4, 4, 4, 7, 8, 8]

|

||||

y, idx = unique(x)

|

||||

y ==> [1, 2, 4, 7, 8]

|

||||

@ -1842,7 +1842,7 @@ contains the count of each element of `y` in `x`. In other words:

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# tensor 'x' is [1, 1, 2, 4, 4, 4, 7, 8, 8]

|

||||

y, idx, count = unique_with_counts(x)

|

||||

y ==> [1, 2, 4, 7, 8]

|

||||

@ -1887,7 +1887,7 @@ This operation returns a 1-D integer tensor representing the shape of `input`.

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 't' is [[[1, 1, 1], [2, 2, 2]], [[3, 3, 3], [4, 4, 4]]]

|

||||

shape(t) ==> [2, 2, 3]

|

||||

```

|

||||

@ -1968,7 +1968,7 @@ slice `i`, with the first `seq_lengths[i]` slices along dimension

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# Given this:

|

||||

batch_dim = 0

|

||||

seq_dim = 1

|

||||

@ -1990,7 +1990,7 @@ output[3, 2:, :, ...] = input[3, 2:, :, ...]

|

||||

|

||||

In contrast, if:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# Given this:

|

||||

batch_dim = 2

|

||||

seq_dim = 0

|

||||

@ -2031,7 +2031,7 @@ This operation returns an integer representing the rank of `input`.

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 't' is [[[1, 1, 1], [2, 2, 2]], [[3, 3, 3], [4, 4, 4]]]

|

||||

# shape of tensor 't' is [2, 2, 3]

|

||||

rank(t) ==> 3

|

||||

@ -2057,7 +2057,7 @@ This operation returns an integer representing the number of elements in

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 't' is [[[1, 1,, 1], [2, 2, 2]], [[3, 3, 3], [4, 4, 4]]]]

|

||||

size(t) ==> 12

|

||||

```

|

||||

@ -2290,7 +2290,7 @@ encoding is best understand by considering a non-trivial example. In

|

||||

particular,

|

||||

`foo[1, 2:4, None, ..., :-3:-1, :]` will be encoded as

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

begin = [1, 2, x, x, 0, x] # x denotes don't care (usually 0)

|

||||

end = [2, 4, x, x, -3, x]

|

||||

strides = [1, 1, x, x, -1, 1]

|

||||

@ -2512,7 +2512,7 @@ the output tensor can vary depending on how many true values there are in

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 'input' tensor is [[True, False]

|

||||

# [True, False]]

|

||||

# 'input' has two true values, so output has two coordinates.

|

||||

@ -2616,7 +2616,7 @@ The padded size of each dimension D of the output is:

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 't' is [[1, 1], [2, 2]]

|

||||

# 'paddings' is [[1, 1], [2, 2]]

|

||||

# rank of 't' is 2

|

||||

@ -2655,7 +2655,7 @@ The padded size of each dimension D of the output is:

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 't' is [[1, 2, 3], [4, 5, 6]].

|

||||

# 'paddings' is [[1, 1]], [2, 2]].

|

||||

# 'mode' is SYMMETRIC.

|

||||

@ -2751,7 +2751,7 @@ The folded size of each dimension D of the output is:

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 't' is [[1, 2, 3], [4, 5, 6], [7, 8, 9]].

|

||||

# 'paddings' is [[0, 1]], [0, 1]].

|

||||

# 'mode' is SYMMETRIC.

|

||||

@ -2927,7 +2927,7 @@ which will make the shape `[1, height, width, channels]`.

|

||||

|

||||

Other examples:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 't' is a tensor of shape [2]

|

||||

shape(expand_dims(t, 0)) ==> [1, 2]

|

||||

shape(expand_dims(t, 1)) ==> [2, 1]

|

||||

@ -3029,14 +3029,14 @@ dimensions, you can remove specific size 1 dimensions by specifying

|

||||

|

||||

For example:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 't' is a tensor of shape [1, 2, 1, 3, 1, 1]

|

||||

shape(squeeze(t)) ==> [2, 3]

|

||||

```

|

||||

|

||||

Or, to remove specific size 1 dimensions:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

# 't' is a tensor of shape [1, 2, 1, 3, 1, 1]

|

||||

shape(squeeze(t, [2, 4])) ==> [1, 2, 3, 1]

|

||||

```

|

||||

@ -3079,14 +3079,14 @@ position of each `out` element in `x`. In other words:

|

||||

|

||||

For example, given this input:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [1, 2, 3, 4, 5, 6]

|

||||

y = [1, 3, 5]

|

||||

```

|

||||

|

||||

This operation would return:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

out ==> [2, 4, 6]

|

||||

idx ==> [1, 3, 5]

|

||||

```

|

||||

@ -3345,34 +3345,34 @@ Some examples:

|

||||

(1) For the following input of shape `[1, 2, 2, 1]`, `block_shape = [2, 2]`, and

|

||||

`paddings = [[0, 0], [0, 0]]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [2]], [[3], [4]]]]

|

||||

```

|

||||

|

||||

The output tensor has shape `[4, 1, 1, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[[1]]], [[[2]]], [[[3]]], [[[4]]]]

|

||||

```

|

||||

|

||||

(2) For the following input of shape `[1, 2, 2, 3]`, `block_shape = [2, 2]`, and

|

||||

`paddings = [[0, 0], [0, 0]]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1, 2, 3], [4, 5, 6]],

|

||||

[[7, 8, 9], [10, 11, 12]]]]

|

||||

```

|

||||

|

||||

The output tensor has shape `[4, 1, 1, 3]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[1, 2, 3]], [[4, 5, 6]], [[7, 8, 9]], [[10, 11, 12]]]

|

||||

```

|

||||

|

||||

(3) For the following input of shape `[1, 4, 4, 1]`, `block_shape = [2, 2]`, and

|

||||

`paddings = [[0, 0], [0, 0]]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [2], [3], [4]],

|

||||

[[5], [6], [7], [8]],

|

||||

[[9], [10], [11], [12]],

|

||||

@ -3381,7 +3381,7 @@ x = [[[[1], [2], [3], [4]],

|

||||

|

||||

The output tensor has shape `[4, 2, 2, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [3]], [[9], [11]]],

|

||||

[[[2], [4]], [[10], [12]]],

|

||||

[[[5], [7]], [[13], [15]]],

|

||||

@ -3391,7 +3391,7 @@ x = [[[[1], [3]], [[9], [11]]],

|

||||

(4) For the following input of shape `[2, 2, 4, 1]`, block_shape = `[2, 2]`, and

|

||||

paddings = `[[0, 0], [2, 0]]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [2], [3], [4]],

|

||||

[[5], [6], [7], [8]]],

|

||||

[[[9], [10], [11], [12]],

|

||||

@ -3400,7 +3400,7 @@ x = [[[[1], [2], [3], [4]],

|

||||

|

||||

The output tensor has shape `[8, 1, 3, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[0], [1], [3]]], [[[0], [9], [11]]],

|

||||

[[[0], [2], [4]]], [[[0], [10], [12]]],

|

||||

[[[0], [5], [7]]], [[[0], [13], [15]]],

|

||||

@ -3474,32 +3474,32 @@ Some examples:

|

||||

|

||||

(1) For the following input of shape `[1, 2, 2, 1]` and block_size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [2]], [[3], [4]]]]

|

||||

```

|

||||

|

||||

The output tensor has shape `[4, 1, 1, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[[1]]], [[[2]]], [[[3]]], [[[4]]]]

|

||||

```

|

||||

|

||||

(2) For the following input of shape `[1, 2, 2, 3]` and block_size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1, 2, 3], [4, 5, 6]],

|

||||

[[7, 8, 9], [10, 11, 12]]]]

|

||||

```

|

||||

|

||||

The output tensor has shape `[4, 1, 1, 3]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[1, 2, 3]], [[4, 5, 6]], [[7, 8, 9]], [[10, 11, 12]]]

|

||||

```

|

||||

|

||||

(3) For the following input of shape `[1, 4, 4, 1]` and block_size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [2], [3], [4]],

|

||||

[[5], [6], [7], [8]],

|

||||

[[9], [10], [11], [12]],

|

||||

@ -3508,7 +3508,7 @@ x = [[[[1], [2], [3], [4]],

|

||||

|

||||

The output tensor has shape `[4, 2, 2, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [3]], [[9], [11]]],

|

||||

[[[2], [4]], [[10], [12]]],

|

||||

[[[5], [7]], [[13], [15]]],

|

||||

@ -3517,7 +3517,7 @@ x = [[[[1], [3]], [[9], [11]]],

|

||||

|

||||

(4) For the following input of shape `[2, 2, 4, 1]` and block_size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [2], [3], [4]],

|

||||

[[5], [6], [7], [8]]],

|

||||

[[[9], [10], [11], [12]],

|

||||

@ -3526,7 +3526,7 @@ x = [[[[1], [2], [3], [4]],

|

||||

|

||||

The output tensor has shape `[8, 1, 2, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [3]]], [[[9], [11]]], [[[2], [4]]], [[[10], [12]]],

|

||||

[[[5], [7]]], [[[13], [15]]], [[[6], [8]]], [[[14], [16]]]]

|

||||

```

|

||||

@ -3612,26 +3612,26 @@ Some examples:

|

||||

(1) For the following input of shape `[4, 1, 1, 1]`, `block_shape = [2, 2]`, and

|

||||

`crops = [[0, 0], [0, 0]]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[[1]]], [[[2]]], [[[3]]], [[[4]]]]

|

||||

```

|

||||

|

||||

The output tensor has shape `[1, 2, 2, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [2]], [[3], [4]]]]

|

||||

```

|

||||

|

||||

(2) For the following input of shape `[4, 1, 1, 3]`, `block_shape = [2, 2]`, and

|

||||

`crops = [[0, 0], [0, 0]]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[1, 2, 3]], [[4, 5, 6]], [[7, 8, 9]], [[10, 11, 12]]]

|

||||

```

|

||||

|

||||

The output tensor has shape `[1, 2, 2, 3]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1, 2, 3], [4, 5, 6]],

|

||||

[[7, 8, 9], [10, 11, 12]]]]

|

||||

```

|

||||

@ -3639,7 +3639,7 @@ x = [[[[1, 2, 3], [4, 5, 6]],

|

||||

(3) For the following input of shape `[4, 2, 2, 1]`, `block_shape = [2, 2]`, and

|

||||

`crops = [[0, 0], [0, 0]]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [3]], [[9], [11]]],

|

||||

[[[2], [4]], [[10], [12]]],

|

||||

[[[5], [7]], [[13], [15]]],

|

||||

@ -3648,7 +3648,7 @@ x = [[[[1], [3]], [[9], [11]]],

|

||||

|

||||

The output tensor has shape `[1, 4, 4, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[1], [2], [3], [4]],

|

||||

[[5], [6], [7], [8]],

|

||||

[[9], [10], [11], [12]],

|

||||

@ -3658,7 +3658,7 @@ x = [[[1], [2], [3], [4]],

|

||||

(4) For the following input of shape `[8, 1, 3, 1]`, `block_shape = [2, 2]`, and

|

||||

`crops = [[0, 0], [2, 0]]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[0], [1], [3]]], [[[0], [9], [11]]],

|

||||

[[[0], [2], [4]]], [[[0], [10], [12]]],

|

||||

[[[0], [5], [7]]], [[[0], [13], [15]]],

|

||||

@ -3667,7 +3667,7 @@ x = [[[[0], [1], [3]]], [[[0], [9], [11]]],

|

||||

|

||||

The output tensor has shape `[2, 2, 4, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [2], [3], [4]],

|

||||

[[5], [6], [7], [8]]],

|

||||

[[[9], [10], [11], [12]],

|

||||

@ -3732,32 +3732,32 @@ Some examples:

|

||||

|

||||

(1) For the following input of shape `[4, 1, 1, 1]` and block_size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[[1]]], [[[2]]], [[[3]]], [[[4]]]]

|

||||

```

|

||||

|

||||

The output tensor has shape `[1, 2, 2, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [2]], [[3], [4]]]]

|

||||

```

|

||||

|

||||

(2) For the following input of shape `[4, 1, 1, 3]` and block_size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[1, 2, 3]], [[4, 5, 6]], [[7, 8, 9]], [[10, 11, 12]]]

|

||||

```

|

||||

|

||||

The output tensor has shape `[1, 2, 2, 3]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1, 2, 3], [4, 5, 6]],

|

||||

[[7, 8, 9], [10, 11, 12]]]]

|

||||

```

|

||||

|

||||

(3) For the following input of shape `[4, 2, 2, 1]` and block_size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [3]], [[9], [11]]],

|

||||

[[[2], [4]], [[10], [12]]],

|

||||

[[[5], [7]], [[13], [15]]],

|

||||

@ -3766,7 +3766,7 @@ x = [[[[1], [3]], [[9], [11]]],

|

||||

|

||||

The output tensor has shape `[1, 4, 4, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[1], [2], [3], [4]],

|

||||

[[5], [6], [7], [8]],

|

||||

[[9], [10], [11], [12]],

|

||||

@ -3775,14 +3775,14 @@ x = [[[1], [2], [3], [4]],

|

||||

|

||||

(4) For the following input of shape `[8, 1, 2, 1]` and block_size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [3]]], [[[9], [11]]], [[[2], [4]]], [[[10], [12]]],

|

||||

[[[5], [7]]], [[[13], [15]]], [[[6], [8]]], [[[14], [16]]]]

|

||||

```

|

||||

|

||||

The output tensor has shape `[2, 2, 4, 1]` and value:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [3]], [[5], [7]]],

|

||||

[[[2], [4]], [[10], [12]]],

|

||||

[[[5], [7]], [[13], [15]]],

|

||||

@ -3848,14 +3848,14 @@ purely convolutional models.

|

||||

|

||||

For example, given this input of shape `[1, 2, 2, 1]`, and block_size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [2]],

|

||||

[[3], [4]]]]

|

||||

```

|

||||

|

||||

This operation will output a tensor of shape `[1, 1, 1, 4]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[[1, 2, 3, 4]]]]

|

||||

```

|

||||

|

||||

@ -3866,7 +3866,7 @@ The output element shape is `[1, 1, 4]`.

|

||||

|

||||

For an input tensor with larger depth, here of shape `[1, 2, 2, 3]`, e.g.

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1, 2, 3], [4, 5, 6]],

|

||||

[[7, 8, 9], [10, 11, 12]]]]

|

||||

```

|

||||

@ -3874,13 +3874,13 @@ x = [[[[1, 2, 3], [4, 5, 6]],

|

||||

This operation, for block_size of 2, will return the following tensor of shape

|

||||

`[1, 1, 1, 12]`

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[[1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12]]]]

|

||||

```

|

||||

|

||||

Similarly, for the following input of shape `[1 4 4 1]`, and a block size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1], [2], [5], [6]],

|

||||

[[3], [4], [7], [8]],

|

||||

[[9], [10], [13], [14]],

|

||||

@ -3889,7 +3889,7 @@ x = [[[[1], [2], [5], [6]],

|

||||

|

||||

the operator will return the following tensor of shape `[1 2 2 4]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1, 2, 3, 4],

|

||||

[5, 6, 7, 8]],

|

||||

[[9, 10, 11, 12],

|

||||

@ -3958,14 +3958,14 @@ purely convolutional models.

|

||||

|

||||

For example, given this input of shape `[1, 1, 1, 4]`, and a block size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1, 2, 3, 4]]]]

|

||||

|

||||

```

|

||||

|

||||

This operation will output a tensor of shape `[1, 2, 2, 1]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[[1], [2]],

|

||||

[[3], [4]]]]

|

||||

```

|

||||

@ -3977,14 +3977,14 @@ The output element shape is `[2, 2, 1]`.

|

||||

|

||||

For an input tensor with larger depth, here of shape `[1, 1, 1, 12]`, e.g.

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12]]]]

|

||||

```

|

||||

|

||||

This operation, for block size of 2, will return the following tensor of shape

|

||||

`[1, 2, 2, 3]`

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

[[[[1, 2, 3], [4, 5, 6]],

|

||||

[[7, 8, 9], [10, 11, 12]]]]

|

||||

|

||||

@ -3992,7 +3992,7 @@ This operation, for block size of 2, will return the following tensor of shape

|

||||

|

||||

Similarly, for the following input of shape `[1 2 2 4]`, and a block size of 2:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[[[1, 2, 3, 4],

|

||||

[5, 6, 7, 8]],

|

||||

[[9, 10, 11, 12],

|

||||

@ -4001,7 +4001,7 @@ x = [[[[1, 2, 3, 4],

|

||||

|

||||

the operator will return the following tensor of shape `[1 4 4 1]`:

|

||||

|

||||

```prettyprint

|

||||

```

|

||||

x = [[ [1], [2], [5], [6]],

|

||||

[ [3], [4], [7], [8]],

|

||||

[ [9], [10], [13], [14]],

|

||||

@ -4775,7 +4775,7 @@ index. For example, say we want to insert 4 scattered elements in a rank-1

|

||||

tensor with 8 elements.

|

||||

|

||||

<div style="width:70%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/ScatterNd1.png" alt>

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/ScatterNd1.png" alt>

|

||||

</div>

|

||||

|

||||

In Python, this scatter operation would look like this:

|

||||

@ -4798,7 +4798,7 @@ example, if we wanted to insert two slices in the first dimension of a

|

||||

rank-3 tensor with two matrices of new values.

|

||||

|

||||

<div style="width:70%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/ScatterNd2.png" alt>

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/ScatterNd2.png" alt>

|

||||

</div>

|

||||

|

||||

In Python, this scatter operation would look like this:

|

||||

|

||||

@ -102,7 +102,7 @@ For example:

|

||||

```

|

||||

|

||||

<div style="width:70%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/DynamicPartition.png" alt>

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/DynamicPartition.png" alt>

|

||||

</div>

|

||||

|

||||

partitions: Any shape. Indices in the range `[0, num_partitions)`.

|

||||

@ -190,7 +190,7 @@ For example:

|

||||

```

|

||||

|

||||

<div style="width:70%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/DynamicStitch.png" alt>

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/DynamicStitch.png" alt>

|

||||

</div>

|

||||

)doc");

|

||||

|

||||

|

||||

@ -295,7 +295,7 @@ the same location, their contributions add.

|

||||

Requires `updates.shape = indices.shape + ref.shape[1:]`.

|

||||

|

||||

<div style="width:70%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/ScatterAdd.png" alt>

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/ScatterAdd.png" alt>

|

||||

</div>

|

||||

|

||||

resource: Should be from a `Variable` node.

|

||||

|

||||

@ -288,7 +288,7 @@ for each value is undefined.

|

||||

Requires `updates.shape = indices.shape + ref.shape[1:]`.

|

||||

|

||||

<div style="width:70%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/ScatterUpdate.png" alt>

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/ScatterUpdate.png" alt>

|

||||

</div>

|

||||

|

||||

ref: Should be from a `Variable` node.

|

||||

@ -332,7 +332,7 @@ the same location, their contributions add.

|

||||

Requires `updates.shape = indices.shape + ref.shape[1:]`.

|

||||

|

||||

<div style="width:70%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/ScatterAdd.png" alt>

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/ScatterAdd.png" alt>

|

||||

</div>

|

||||

|

||||

ref: Should be from a `Variable` node.

|

||||

@ -376,7 +376,7 @@ the same location, their (negated) contributions add.

|

||||

Requires `updates.shape = indices.shape + ref.shape[1:]`.

|

||||

|

||||

<div style="width:70%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/ScatterSub.png" alt>

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/ScatterSub.png" alt>

|

||||

</div>

|

||||

|

||||

ref: Should be from a `Variable` node.

|

||||

|

||||

@ -33,7 +33,7 @@ plt.plot(x, z)

|

||||

```

|

||||

|

||||

<div style="width:70%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/lorenz_attractor.png" alt>

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/lorenz_attractor.png" alt>

|

||||

</div>

|

||||

|

||||

## Ops

|

||||

|

||||

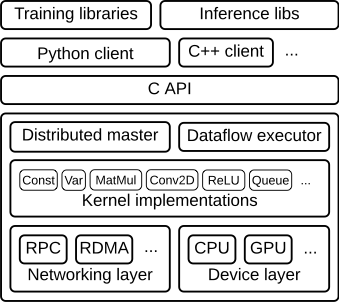

@ -25,7 +25,7 @@ The TensorFlow runtime is a cross-platform library. Figure 1 illustrates its

|

||||

general architecture. A C API separates user level code in different languages

|

||||

from the core runtime.

|

||||

|

||||

{: width="300"}

|

||||

{: width="300"}

|

||||

|

||||

**Figure 1**

|

||||

|

||||

@ -57,7 +57,7 @@ Other tasks send updates to these parameters as they work on optimizing the

|

||||

parameters. This particular division of labor between tasks is not required, but

|

||||

it is common for distributed training.

|

||||

|

||||

{: width="500"}

|

||||

{: width="500"}

|

||||

|

||||

**Figure 2**

|

||||

|

||||

@ -91,7 +91,7 @@ In Figure 3, the client has built a graph that applies weights (w) to a

|

||||

feature vector (x), adds a bias term (b) and saves the result in a variable

|

||||

(s).

|

||||

|

||||

{: width="700"}

|

||||

{: width="700"}

|

||||

|

||||

**Figure 3**

|

||||

|

||||

@ -114,7 +114,7 @@ a step, it applies standard optimizations such as common subexpression

|

||||

elimination and constant folding. It then coordinates execution of the

|

||||

optimized subgraphs across a set of tasks.

|

||||

|

||||

{: width="700"}

|

||||

{: width="700"}

|

||||

|

||||

**Figure 4**

|

||||

|

||||

@ -123,7 +123,7 @@ Figure 5 shows a possible partition of our example graph. The distributed

|

||||

master has grouped the model parameters in order to place them together on the

|

||||

parameter server.

|

||||

|

||||

{: width="700"}

|

||||

{: width="700"}

|

||||

|

||||

**Figure 5**

|

||||

|

||||

@ -132,14 +132,14 @@ Where graph edges are cut by the partition, the distributed master inserts

|

||||

send and receive nodes to pass information between the distributed tasks

|

||||

(Figure 6).

|

||||

|

||||

{: width="700"}

|

||||

{: width="700"}

|

||||

|

||||

**Figure 6**

|

||||

|

||||

|

||||

The distributed master then ships the graph pieces to the distributed tasks.

|

||||

|

||||

{: width="700"}

|

||||

{: width="700"}

|

||||

|

||||

**Figure 7**

|

||||

|

||||

@ -181,7 +181,7 @@ We also have preliminary support for NVIDIA's NCCL library for multi-GPU

|

||||

communication (see [`tf.contrib.nccl`](

|

||||

https://www.tensorflow.org/code/tensorflow/contrib/nccl/python/ops/nccl_ops.py)).

|

||||

|

||||

{: width="700"}

|

||||

{: width="700"}

|

||||

|

||||

**Figure 8**

|

||||

|

||||

|

||||

@ -72,7 +72,7 @@ for abalone:

|

||||

|

||||

The label to predict is number of rings, as a proxy for abalone age.

|

||||

|

||||

**[“Abalone

|

||||

**[“Abalone

|

||||

shell”](https://www.flickr.com/photos/thenickster/16641048623/) (by [Nicki Dugan

|

||||

Pogue](https://www.flickr.com/photos/thenickster/), CC BY-SA 2.0)**

|

||||

|

||||

|

||||

@ -21,7 +21,7 @@ interested in word embeddings,

|

||||

gives a good introduction.

|

||||

|

||||

<video autoplay loop style="max-width: 100%;">

|

||||

<source src="../images/embedding-mnist.mp4" type="video/mp4">

|

||||

<source src="https://www.tensorflow.org/images/embedding-mnist.mp4" type="video/mp4">

|

||||

Sorry, your browser doesn't support HTML5 video in MP4 format.

|

||||

</video>

|

||||

|

||||

@ -173,7 +173,7 @@ last data point in the bottom right:

|

||||

|

||||

Note in the example above that the last row doesn't have to be filled. For a

|

||||

concrete example of a sprite, see

|

||||

[this sprite image](../images/mnist_10k_sprite.png) of 10,000 MNIST digits

|

||||

[this sprite image](https://www.tensorflow.org/images/mnist_10k_sprite.png) of 10,000 MNIST digits

|

||||

(100x100).

|

||||

|

||||

Note: We currently support sprites up to 8192px X 8192px.

|

||||

@ -247,7 +247,7 @@ further analysis on their own with the "Isolate Points" button in the Inspector

|

||||

pane on the right hand side.

|

||||

|

||||

|

||||

|

||||

|

||||

*Selection of the nearest neighbors of “important” in a word embedding dataset.*

|

||||

|

||||

The combination of filtering with custom projection can be powerful. Below, we filtered

|

||||

@ -260,10 +260,10 @@ You can see that on the right side we have “ideas”, “science”, “perspe

|

||||

<table width="100%;">

|

||||

<tr>

|

||||

<td style="width: 30%;">

|

||||

<img src="../images/embedding-custom-controls.png" alt="Custom controls panel" title="Custom controls panel" />

|

||||

<img src="https://www.tensorflow.org/images/embedding-custom-controls.png" alt="Custom controls panel" title="Custom controls panel" />

|

||||

</td>

|

||||

<td style="width: 70%;">

|

||||

<img src="../images/embedding-custom-projection.png" alt="Custom projection" title="Custom projection" />

|

||||

<img src="https://www.tensorflow.org/images/embedding-custom-projection.png" alt="Custom projection" title="Custom projection" />

|

||||

</td>

|

||||

</tr>

|

||||

<tr>

|

||||

@ -284,4 +284,4 @@ projection) as a small file. The Projector can then be pointed to a set of one

|

||||

or more of these files, producing the panel below. Other users can then walk

|

||||

through a sequence of bookmarks.

|

||||

|

||||

<img src="../images/embedding-bookmark.png" alt="Bookmark panel" style="width:300px;">

|

||||

<img src="https://www.tensorflow.org/images/embedding-bookmark.png" alt="Bookmark panel" style="width:300px;">

|

||||

|

||||

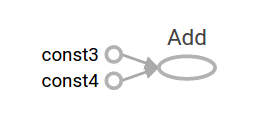

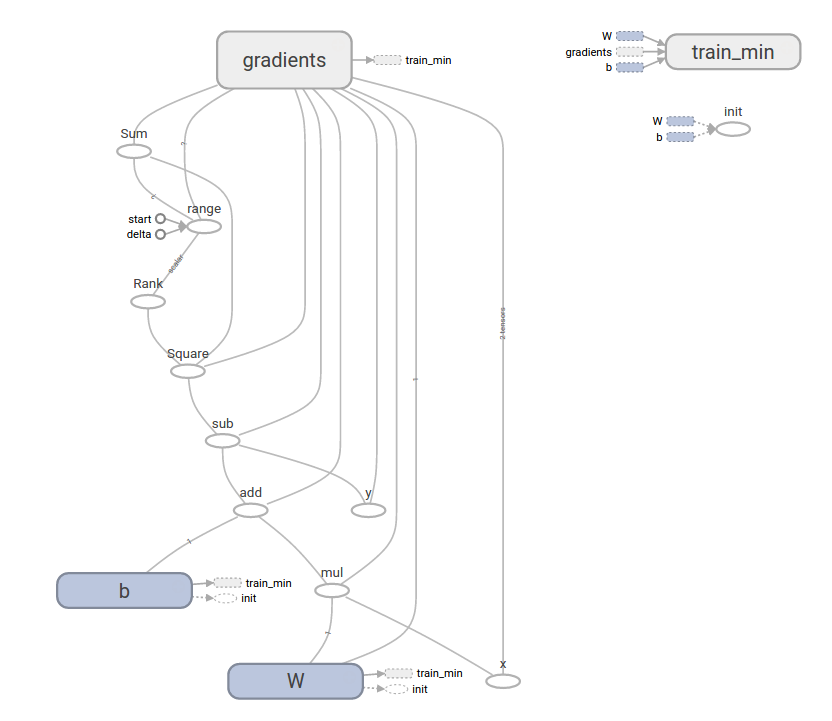

@ -123,7 +123,7 @@ TensorFlow provides a utility called TensorBoard that can display a picture of

|

||||

the computational graph. Here is a screenshot showing how TensorBoard

|

||||

visualizes the graph:

|

||||

|

||||

|

||||

|

||||

|

||||

As it stands, this graph is not especially interesting because it always

|

||||

produces a constant result. A graph can be parameterized to accept external

|

||||

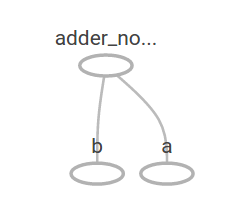

@ -154,7 +154,7 @@ resulting in the output

|

||||

|

||||

In TensorBoard, the graph looks like this:

|

||||

|

||||

|

||||

|

||||

|

||||

We can make the computational graph more complex by adding another operation.

|

||||

For example,

|

||||

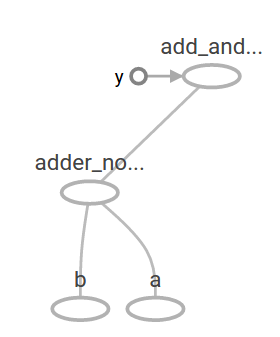

@ -170,7 +170,7 @@ produces the output

|

||||

|

||||

The preceding computational graph would look as follows in TensorBoard:

|

||||

|

||||

|

||||

|

||||

|

||||

In machine learning we will typically want a model that can take arbitrary

|

||||

inputs, such as the one above. To make the model trainable, we need to be able

|

||||

@ -336,7 +336,7 @@ program your loss will not be exactly the same, because the model is initialized

|

||||

with random values.

|

||||

|

||||

This more complicated program can still be visualized in TensorBoard

|

||||

|

||||

|

||||

|

||||

## `tf.contrib.learn`

|

||||

|

||||

|

||||

@ -2,7 +2,7 @@

|

||||

|

||||

TensorFlow computation graphs are powerful but complicated. The graph visualization can help you understand and debug them. Here's an example of the visualization at work.

|

||||

|

||||

|

||||

|

||||

*Visualization of a TensorFlow graph.*

|

||||

|

||||

To see your own graph, run TensorBoard pointing it to the log directory of the job, click on the graph tab on the top pane and select the appropriate run using the menu at the upper left corner. For in depth information on how to run TensorBoard and make sure you are logging all the necessary information, see @{$summaries_and_tensorboard$TensorBoard: Visualizing Learning}.

|

||||

@ -43,10 +43,10 @@ expanded states.

|

||||

<table width="100%;">

|

||||

<tr>

|

||||

<td style="width: 50%;">

|

||||

<img src="../images/pool1_collapsed.png" alt="Unexpanded name scope" title="Unexpanded name scope" />

|

||||

<img src="https://www.tensorflow.org/images/pool1_collapsed.png" alt="Unexpanded name scope" title="Unexpanded name scope" />

|

||||

</td>

|

||||

<td style="width: 50%;">

|

||||

<img src="../images/pool1_expanded.png" alt="Expanded name scope" title="Expanded name scope" />

|

||||

<img src="https://www.tensorflow.org/images/pool1_expanded.png" alt="Expanded name scope" title="Expanded name scope" />

|

||||

</td>

|

||||

</tr>

|

||||

<tr>

|

||||

@ -87,10 +87,10 @@ and the auxiliary area.

|

||||

<table width="100%;">

|

||||

<tr>

|

||||

<td style="width: 50%;">

|

||||

<img src="../images/conv_1.png" alt="conv_1 is part of the main graph" title="conv_1 is part of the main graph" />

|

||||

<img src="https://www.tensorflow.org/images/conv_1.png" alt="conv_1 is part of the main graph" title="conv_1 is part of the main graph" />

|

||||

</td>

|

||||

<td style="width: 50%;">

|

||||

<img src="../images/save.png" alt="save is extracted as auxiliary node" title="save is extracted as auxiliary node" />

|

||||

<img src="https://www.tensorflow.org/images/save.png" alt="save is extracted as auxiliary node" title="save is extracted as auxiliary node" />

|

||||

</td>

|

||||

</tr>

|

||||

<tr>

|

||||

@ -114,10 +114,10 @@ specific set of nodes.

|

||||

<table width="100%;">

|

||||

<tr>

|

||||

<td style="width: 50%;">

|

||||

<img src="../images/series.png" alt="Sequence of nodes" title="Sequence of nodes" />

|

||||

<img src="https://www.tensorflow.org/images/series.png" alt="Sequence of nodes" title="Sequence of nodes" />

|

||||

</td>

|

||||

<td style="width: 50%;">

|

||||

<img src="../images/series_expanded.png" alt="Expanded sequence of nodes" title="Expanded sequence of nodes" />

|

||||

<img src="https://www.tensorflow.org/images/series_expanded.png" alt="Expanded sequence of nodes" title="Expanded sequence of nodes" />

|

||||

</td>

|

||||

</tr>

|

||||

<tr>

|

||||

@ -135,15 +135,15 @@ for constants and summary nodes. To summarize, here's a table of node symbols:

|

||||

|

||||

Symbol | Meaning

|

||||

--- | ---

|

||||

| *High-level* node representing a name scope. Double-click to expand a high-level node.

|

||||

| Sequence of numbered nodes that are not connected to each other.

|

||||

| Sequence of numbered nodes that are connected to each other.

|

||||

| An individual operation node.

|

||||

| A constant.

|

||||

| A summary node.

|

||||

| Edge showing the data flow between operations.

|

||||

| Edge showing the control dependency between operations.

|

||||

| A reference edge showing that the outgoing operation node can mutate the incoming tensor.

|

||||

| *High-level* node representing a name scope. Double-click to expand a high-level node.

|

||||

| Sequence of numbered nodes that are not connected to each other.

|

||||

| Sequence of numbered nodes that are connected to each other.

|

||||

| An individual operation node.

|

||||

| A constant.

|

||||

| A summary node.

|

||||

| Edge showing the data flow between operations.

|

||||

| Edge showing the control dependency between operations.

|

||||

| A reference edge showing that the outgoing operation node can mutate the incoming tensor.

|

||||

|

||||

## Interaction {#interaction}

|

||||

|

||||

@ -161,10 +161,10 @@ right corner of the visualization.

|

||||

<table width="100%;">

|

||||

<tr>

|

||||

<td style="width: 50%;">

|

||||

<img src="../images/infocard.png" alt="Info card of a name scope" title="Info card of a name scope" />

|

||||

<img src="https://www.tensorflow.org/images/infocard.png" alt="Info card of a name scope" title="Info card of a name scope" />

|

||||

</td>

|

||||

<td style="width: 50%;">

|

||||

<img src="../images/infocard_op.png" alt="Info card of operation node" title="Info card of operation node" />

|

||||

<img src="https://www.tensorflow.org/images/infocard_op.png" alt="Info card of operation node" title="Info card of operation node" />

|

||||

</td>

|

||||

</tr>

|

||||

<tr>

|

||||

@ -207,10 +207,10 @@ The images below give an illustration for a piece of a real-life graph.

|

||||

<table width="100%;">

|

||||

<tr>

|

||||

<td style="width: 50%;">

|

||||

<img src="../images/colorby_structure.png" alt="Color by structure" title="Color by structure" />

|

||||

<img src="https://www.tensorflow.org/images/colorby_structure.png" alt="Color by structure" title="Color by structure" />

|

||||

</td>

|

||||

<td style="width: 50%;">

|

||||

<img src="../images/colorby_device.png" alt="Color by device" title="Color by device" />

|

||||

<img src="https://www.tensorflow.org/images/colorby_device.png" alt="Color by device" title="Color by device" />

|

||||

</td>

|

||||

</tr>

|

||||

<tr>

|

||||

@ -233,7 +233,7 @@ The images below show the CIFAR-10 model with tensor shape information:

|

||||

<table width="100%;">

|

||||

<tr>

|

||||

<td style="width: 100%;">

|

||||

<img src="../images/tensor_shapes.png" alt="CIFAR-10 model with tensor shape information" title="CIFAR-10 model with tensor shape information" />

|

||||

<img src="https://www.tensorflow.org/images/tensor_shapes.png" alt="CIFAR-10 model with tensor shape information" title="CIFAR-10 model with tensor shape information" />

|

||||

</td>

|

||||

</tr>

|

||||

<tr>

|

||||

@ -303,13 +303,13 @@ tensor output sizes.

|

||||

<table width="100%;">

|

||||

<tr style="height: 380px">

|

||||

<td>

|

||||

<img src="../images/colorby_compute_time.png" alt="Color by compute time" title="Color by compute time"/>

|

||||

<img src="https://www.tensorflow.org/images/colorby_compute_time.png" alt="Color by compute time" title="Color by compute time"/>

|

||||

</td>

|

||||

<td>

|

||||

<img src="../images/run_metadata_graph.png" alt="Run metadata graph" title="Run metadata graph" />

|

||||

<img src="https://www.tensorflow.org/images/run_metadata_graph.png" alt="Run metadata graph" title="Run metadata graph" />

|

||||

</td>

|

||||

<td>

|

||||

<img src="../images/run_metadata_infocard.png" alt="Run metadata info card" title="Run metadata info card" />

|

||||

<img src="https://www.tensorflow.org/images/run_metadata_infocard.png" alt="Run metadata info card" title="Run metadata info card" />

|

||||

</td>

|

||||

</tr>

|

||||

</table>

|

||||

|

||||

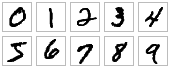

@ -15,7 +15,7 @@ MNIST is a simple computer vision dataset. It consists of images of handwritten

|

||||

digits like these:

|

||||

|

||||

<div style="width:40%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/MNIST.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/MNIST.png">

|

||||

</div>

|

||||

|

||||

It also includes labels for each image, telling us which digit it is. For

|

||||

@ -88,7 +88,7 @@ Each image is 28 pixels by 28 pixels. We can interpret this as a big array of

|

||||

numbers:

|

||||

|

||||

<div style="width:50%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/MNIST-Matrix.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/MNIST-Matrix.png">

|

||||

</div>

|

||||

|

||||

We can flatten this array into a vector of 28x28 = 784 numbers. It doesn't

|

||||

@ -110,7 +110,7 @@ Each entry in the tensor is a pixel intensity between 0 and 1, for a particular

|

||||

pixel in a particular image.

|

||||

|

||||

<div style="width:40%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/mnist-train-xs.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/mnist-train-xs.png">

|

||||

</div>

|

||||

|

||||

Each image in MNIST has a corresponding label, a number between 0 and 9

|

||||

@ -124,7 +124,7 @@ vector which is 1 in the \\(n\\)th dimension. For example, 3 would be

|

||||

`[55000, 10]` array of floats.

|

||||

|

||||

<div style="width:40%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/mnist-train-ys.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/mnist-train-ys.png">

|

||||

</div>

|

||||

|

||||

We're now ready to actually make our model!

|

||||

@ -157,7 +157,7 @@ classes. Red represents negative weights, while blue represents positive

|

||||

weights.

|

||||

|

||||

<div style="width:40%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/softmax-weights.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/softmax-weights.png">

|

||||

</div>

|

||||

|

||||

We also add some extra evidence called a bias. Basically, we want to be able

|

||||

@ -202,13 +202,13 @@ although with a lot more \\(x\\)s. For each output, we compute a weighted sum of

|

||||

the \\(x\\)s, add a bias, and then apply softmax.

|

||||

|

||||

<div style="width:55%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/softmax-regression-scalargraph.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/softmax-regression-scalargraph.png">

|

||||

</div>

|

||||

|

||||

If we write that out as equations, we get:

|

||||

|

||||

<div style="width:52%; margin-left:25%; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/softmax-regression-scalarequation.png"

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/softmax-regression-scalarequation.png"

|

||||

alt="[y1, y2, y3] = softmax(W11*x1 + W12*x2 + W13*x3 + b1, W21*x1 + W22*x2 + W23*x3 + b2, W31*x1 + W32*x2 + W33*x3 + b3)">

|

||||

</div>

|

||||

|

||||

@ -217,7 +217,7 @@ and vector addition. This is helpful for computational efficiency. (It's also

|

||||

a useful way to think.)

|

||||

|

||||

<div style="width:50%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/softmax-regression-vectorequation.png"

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/softmax-regression-vectorequation.png"

|

||||

alt="[y1, y2, y3] = softmax([[W11, W12, W13], [W21, W22, W23], [W31, W32, W33]]*[x1, x2, x3] + [b1, b2, b3])">

|

||||

</div>

|

||||

|

||||

|

||||

@ -34,7 +34,7 @@ MNIST is a classic problem in machine learning. The problem is to look at

|

||||

greyscale 28x28 pixel images of handwritten digits and determine which digit

|

||||

the image represents, for all the digits from zero to nine.

|

||||

|

||||

|

||||

|

||||

|

||||

For more information, refer to [Yann LeCun's MNIST page](http://yann.lecun.com/exdb/mnist/)

|

||||

or [Chris Olah's visualizations of MNIST](http://colah.github.io/posts/2014-10-Visualizing-MNIST/).

|

||||

@ -90,7 +90,7 @@ loss.

|

||||

and apply gradients.

|

||||

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/mnist_subgraph.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/mnist_subgraph.png">

|

||||

</div>

|

||||

|

||||

### Inference

|

||||

@ -384,7 +384,7 @@ summary_writer.add_summary(summary_str, step)

|

||||

When the events files are written, TensorBoard may be run against the training

|

||||

folder to display the values from the summaries.

|

||||

|

||||

|

||||

|

||||

|

||||

**NOTE**: For more info about how to build and run Tensorboard, please see the accompanying tutorial @{$summaries_and_tensorboard$Tensorboard: Visualizing Learning}.

|

||||

|

||||

|

||||

@ -401,6 +401,6 @@ Then navigate to `http://0.0.0.0:`*`<port_number>`* in your browser, where

|

||||

If you click on the accuracy field, you'll see an image like the following,

|

||||

which shows accuracy plotted against step count:

|

||||

|

||||

|

||||

|

||||

|

||||

For more on using TensorBoard, see @{$summaries_and_tensorboard$TensorBoard: Visualizing Learning} and @{$graph_viz$TensorBoard: Graph Visualization}.

|

||||

|

||||

@ -8,7 +8,7 @@ your TensorFlow graph, plot quantitative metrics about the execution of your

|

||||

graph, and show additional data like images that pass through it. When

|

||||

TensorBoard is fully configured, it looks like this:

|

||||

|

||||

|

||||

|

||||

|

||||

<div class="video-wrapper">

|

||||

<iframe class="devsite-embedded-youtube-video" data-video-id="eBbEDRsCmv4"

|

||||

|

||||

@ -118,7 +118,7 @@ The [Iris data set](https://en.wikipedia.org/wiki/Iris_flower_data_set) contains

|

||||

150 rows of data, comprising 50 samples from each of three related Iris species:

|

||||

*Iris setosa*, *Iris virginica*, and *Iris versicolor*.

|

||||

|

||||

**From left to right,

|

||||

**From left to right,

|

||||

[*Iris setosa*](https://commons.wikimedia.org/w/index.php?curid=170298) (by

|

||||

[Radomil](https://commons.wikimedia.org/wiki/User:Radomil), CC BY-SA 3.0),

|

||||

[*Iris versicolor*](https://commons.wikimedia.org/w/index.php?curid=248095) (by

|

||||

|

||||

@ -143,13 +143,13 @@ conversion functions before and after to move the data between float and

|

||||

eight-bit. Below is an example of what they look like. First here's the original

|

||||

Relu operation, with float inputs and outputs:

|

||||

|

||||

|

||||

|

||||

|

||||

Then, this is the equivalent converted subgraph, still with float inputs and

|

||||

outputs, but with internal conversions so the calculations are done in eight

|

||||

bit.

|

||||

|

||||

|

||||

|

||||

|

||||

The min and max operations actually look at the values in the input float

|

||||

tensor, and then feeds them into the Dequantize operation that converts the

|

||||

@ -162,7 +162,7 @@ operations that all have float equivalents, then there will be a lot of adjacent

|

||||

Dequantize/Quantize ops. This stage spots that pattern, recognizes that they

|

||||

cancel each other out, and removes them, like this:

|

||||

|

||||

|

||||

|

||||

|

||||

Applied on a large scale to models where all of the operations have quantized

|

||||

equivalents, this gives a graph where all of the tensor calculations are done in

|

||||

|

||||

@ -62,7 +62,7 @@ well as the NVIDIA GPU backend are in the TensorFlow source tree.

|

||||

The following diagram shows the compilation process in XLA:

|

||||

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img src="../../images/how-does-xla-work.png">

|

||||

<img src="https://www.tensorflow.org/images/how-does-xla-work.png">

|

||||

</div>

|

||||

|

||||

XLA comes with several optimizations and analyses that are target-independent,

|

||||

|

||||

@ -124,7 +124,7 @@ open the timeline file created when the script finishes: `timeline.ctf.json`.

|

||||

The rendered timeline should look similar to the picture below with multiple

|

||||

green boxes labeled `MatMul`, possibly across multiple CPUs.

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/jit_timeline_gpu.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/jit_timeline_gpu.png">

|

||||

</div>

|

||||

|

||||

### Step #3 Run with XLA

|

||||

@ -139,7 +139,7 @@ TF_XLA_FLAGS=--xla_generate_hlo_graph=.* python mnist_softmax_xla.py

|

||||

Open the timeline file created (`timeline.ctf.json`). The rendered timeline

|

||||

should look similar to the picture below with one long bar labeled `_XlaLaunch`.

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/jit_timeline_gpu_xla.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/jit_timeline_gpu_xla.png">

|

||||

</div>

|

||||

|

||||

To understand what is happening in `_XlaLaunch`, look at the console output for

|

||||

@ -165,5 +165,5 @@ dot -Tpng hlo_graph_80.dot -o hlo_graph_80.png

|

||||

|

||||

The result will look like the following:

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/jit_gpu_xla_graph.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/jit_gpu_xla_graph.png">

|

||||

</div>

|

||||

|

||||

@ -178,7 +178,7 @@ Concat({a, b}, 0)

|

||||

|

||||

Diagram:

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/ops_concatenate.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/ops_concatenate.png">

|

||||

</div>

|

||||

|

||||

## ConvertElementType

|

||||

@ -707,7 +707,7 @@ are all 0. Figure below shows examples of different `edge_padding` and

|

||||

`interior_padding` values for a two dimensional array.

|

||||

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/ops_pad.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/ops_pad.png">

|

||||

</div>

|

||||

|

||||

## Reduce

|

||||

@ -781,13 +781,13 @@ Here's an example of reducing a 2D array (matrix). The shape has rank 2,

|

||||

dimension 0 of size 2 and dimension 1 of size 3:

|

||||

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:35%" src="../../images/ops_2d_matrix.png">

|

||||

<img style="width:35%" src="https://www.tensorflow.org/images/ops_2d_matrix.png">

|

||||

</div>

|

||||

|

||||

Results of reducing dimensions 0 or 1 with an "add" function:

|

||||

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:35%" src="../../images/ops_reduce_from_2d_matrix.png">

|

||||

<img style="width:35%" src="https://www.tensorflow.org/images/ops_reduce_from_2d_matrix.png">

|

||||

</div>

|

||||

|

||||

Note that both reduction results are 1D arrays. The diagram shows one as column

|

||||

@ -798,7 +798,7 @@ size 4, dimension 1 of size 2 and dimension 2 of size 3. For simplicity, the

|

||||

values 1 to 6 are replicated across dimension 0.

|

||||

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:35%" src="../../images/ops_reduce_from_3d_matrix.png">

|

||||

<img style="width:35%" src="https://www.tensorflow.org/images/ops_reduce_from_3d_matrix.png">

|

||||

</div>

|

||||

|

||||

Similarly to the 2D example, we can reduce just one dimension. If we reduce

|

||||

@ -890,7 +890,7 @@ builder.ReduceWindow(

|

||||

```

|

||||

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:35%" src="../../images/ops_reduce_window.png">

|

||||

<img style="width:35%" src="https://www.tensorflow.org/images/ops_reduce_window.png">

|

||||

</div>

|

||||

|

||||

Stride of 1 in a dimension specifies that the position of a window in the

|

||||

@ -902,7 +902,7 @@ are the same as though the input came in with the dimensions it has after

|

||||

padding.

|

||||

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:75%" src="../../images/ops_reduce_window_stride.png">

|

||||

<img style="width:75%" src="https://www.tensorflow.org/images/ops_reduce_window_stride.png">

|

||||

</div>

|

||||

|

||||

The evaluation order of the reduction function is arbitrary and may be

|

||||

@ -1144,7 +1144,7 @@ addition `scatter` function produces the output element of value 8 (2 + 6).

|

||||

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%"

|

||||

src="../../images/ops_scatter_to_selected_window_element.png">

|

||||

src="https://www.tensorflow.org/images/ops_scatter_to_selected_window_element.png">

|

||||

</div>

|

||||

|

||||

The evaluation order of the `scatter` function is arbitrary and may be

|

||||

@ -1482,5 +1482,5 @@ while (result(0) < 1000) {

|

||||

```

|

||||

|

||||

<div style="width:95%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../../images/ops_while.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/ops_while.png">

|

||||

</div>

|

||||

|

||||

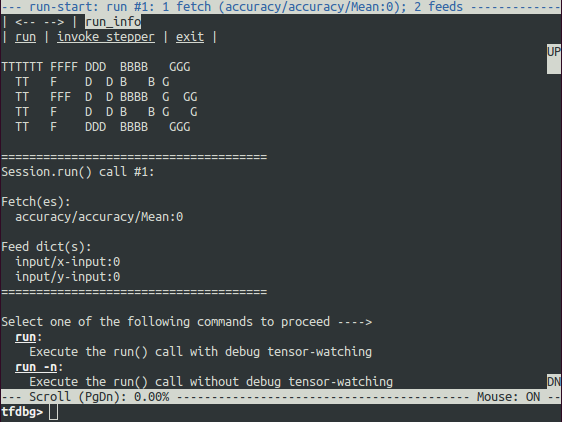

@ -24,7 +24,7 @@ This code trains a simple NN for MNIST digit image recognition. Notice that the

|

||||

accuracy increases slightly after the first training step, but then gets stuck

|

||||

at a low (near-chance) level:

|

||||

|

||||

|

||||

|

||||

|

||||

Scratching your head, you suspect that certain nodes in the training graph

|

||||

generated bad numeric values such as `inf`s and `nan`s. The computation-graph

|

||||

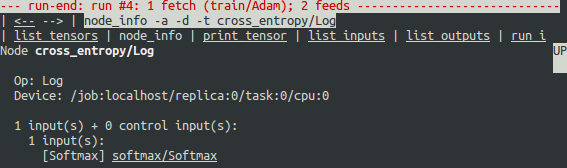

@ -89,7 +89,7 @@ The debug wrapper session will prompt you when it is about to execute the first

|

||||

`run()` call, with information regarding the fetched tensor and feed

|

||||

dictionaries displayed on the screen.

|

||||

|

||||

|

||||

|

||||

|

||||

This is what we refer to as the *run-start UI*. If the screen size is

|

||||

too small to display the content of the message in its entirety, you can resize

|

||||

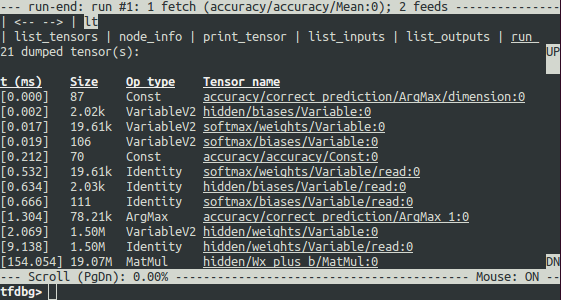

@ -108,7 +108,7 @@ intermediate tensors from the run. (These tensors can also be obtained by

|

||||

running the command `lt` after you executed `run`.) This is called the

|

||||

**run-end UI**:

|

||||

|

||||

|

||||

|

||||

|

||||

### tfdbg CLI Frequently-Used Commands

|

||||

|

||||

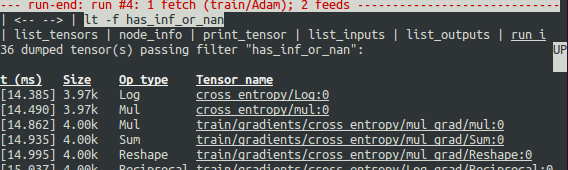

@ -181,7 +181,7 @@ screen with a red-colored title line indicating **tfdbg** stopped immediately

|

||||

after a `run()` call generated intermediate tensors that passed the specified

|

||||

filter `has_inf_or_nan`:

|

||||

|

||||

|

||||

|

||||

|

||||

As the screen display indicates, the `has_inf_or_nan` filter is first passed

|

||||

during the fourth `run()` call: an [Adam optimizer](https://arxiv.org/abs/1412.6980)

|

||||

@ -220,7 +220,7 @@ item on the top or entering the equivalent command:

|

||||

tfdbg> ni cross_entropy/Log

|

||||

```

|

||||

|

||||

|

||||

|

||||

|

||||

You can see that this node has the op type `Log`

|

||||

and that its input is the node `softmax/Softmax`. Run the following command to

|

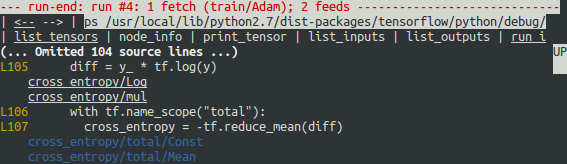

||||

@ -263,7 +263,7 @@ simply click the underlined line numbers in the stack trace output of the

|

||||

`ni -t <op_name>` commands, or use the `ps` (or `print_source`) command such as:

|

||||

`ps /path/to/source.py`. See the screenshot below for an example of `ps` output:

|

||||

|

||||

|

||||

|

||||

|

||||

Apply a value clipping on the input to @{tf.log}

|

||||

to resolve this problem:

|

||||

|

||||

@ -309,7 +309,7 @@ operations, so that our training loop can dequeue examples from the example

|

||||

queue.

|

||||

|

||||

<div style="width:70%; margin-left:12%; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../images/AnimatedFileQueues.gif">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/AnimatedFileQueues.gif">

|

||||

</div>

|

||||

|

||||

The helpers in `tf.train` that create these queues and enqueuing operations add

|

||||

|

||||

@ -14,7 +14,7 @@ that takes an item off the queue, adds one to that item, and puts it back on the

|

||||

end of the queue. Slowly, the numbers on the queue increase.

|

||||

|

||||

<div style="width:70%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../images/IncremeterFifoQueue.gif">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/IncremeterFifoQueue.gif">

|

||||

</div>

|

||||

|

||||

`Enqueue`, `EnqueueMany`, and `Dequeue` are special nodes. They take a pointer

|

||||

|

||||

@ -141,7 +141,7 @@ so that we may visualize them in @{$summaries_and_tensorboard$TensorBoard}.

|

||||

This is a good practice to verify that inputs are built correctly.

|

||||

|

||||

<div style="width:50%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:70%" src="../images/cifar_image_summary.png">

|

||||

<img style="width:70%" src="https://www.tensorflow.org/images/cifar_image_summary.png">

|

||||

</div>

|

||||

|

||||

Reading images from disk and distorting them can use a non-trivial amount of

|

||||

@ -170,7 +170,7 @@ Layer Name | Description

|

||||

Here is a graph generated from TensorBoard describing the inference operation:

|

||||

|

||||

<div style="width:15%; margin:auto; margin-bottom:10px; margin-top:20px;">

|

||||

<img style="width:100%" src="../images/cifar_graph.png">

|

||||

<img style="width:100%" src="https://www.tensorflow.org/images/cifar_graph.png">

|

||||

</div>

|

||||

|

||||

> **EXERCISE**: The output of `inference` are un-normalized logits. Try editing

|

||||