docs: Overhaul AI documentation (#29747)

To support the Agentic Editing launch. To dos before merging:

- [ ] Anything marked as `todo!` within `docs/src` (Anyone)
- [x] Check all internal links (Joe)
  - Joe: I checked all links and fixed all aside from a few that I annotated with `todo!` comments
- [ ] Update images (Danilo)
- [ ] Go over / show images of tool cards in agent panel overview (Danilo)
- [ ] Point billing FAQ to new billing docs (Joe)
- [x] Redirects external links
- [ ] Needs testing
- [x] Delete old docs
- [ ] Ensure all mentioned bindings use the `{#kb ...}` format and that they are rendering correctly
- [ ] All agent-related actions are now `agent::` and not `assistant::`
- [x] Mention support of `.rules` files in `rules.md`

Release Notes:

- N/A

---------

Co-authored-by: Joseph T. Lyons <josephtlyons@gmail.com>
Co-authored-by: morgankrey <morgankrey@gmail.com>
Co-authored-by: Smit Barmase <37347831+smitbarmase@users.noreply.github.com>
Co-authored-by: Ben Kunkle <Ben.kunkle@gmail.com>
Co-authored-by: Peter Tripp <peter@zed.dev>
Co-authored-by: Ben Kunkle <ben@zed.dev>
Co-authored-by: Mikayla Maki <mikayla.c.maki@gmail.com>
Co-authored-by: Ben Brandt <benjamin.j.brandt@gmail.com>

Parent: 38975586d4
Commit: 795fadc0bc

@ -4,12 +4,14 @@ Welcome to Zed's documentation.

This is built on push to `main` and published automatically to [https://zed.dev/docs](https://zed.dev/docs).

To preview the docs locally you will need to install [mdBook](https://rust-lang.github.io/mdBook/) (`cargo install mdbook`) and then run:
To preview the docs locally you will need to install [mdBook](https://rust-lang.github.io/mdBook/) (`cargo install mdbook@0.4.40`) and then run:

```sh
mdbook serve docs
```

It's important to note the version number above. For an unknown reason, as of 2025-04-23, running 0.4.48 will cause odd URL behavior that breaks docs.

Before committing, verify that the docs are formatted in the way prettier expects with:

```

@ -25,6 +25,17 @@ enable = false

"/assistant.html" = "/docs/assistant/assistant.html"
"/developing-zed.html" = "/docs/development.html"
"/conversations.html" = "/community-links"
"/assistant/assistant.html" = "/ai/ai.html"
"/assistant/configuration.html" = "/ai/configuration.html"
"/assistant/assistant-panel.html" = "/ai/agent-panel.html"
"/assistant/contexts.html" = "/ai/text-threads.html"
"/assistant/inline-assistant.html" = "/ai/inline-assistant.html"
"/assistant/commands.html" = "/ai/text-threads.html"
"/assistant/prompting.html" = "/ai/rules.html"
"/assistant/context-servers.html" = "/ai/mcp.html"
"/assistant/model-context-protocol.html" = "/ai/mcp.html"
"/model-improvement.html" = "/ai/ai-improvement.html"

# Our custom preprocessor for expanding commands like `{#kb action::ActionName}`,
# and other docs-related functions.

@ -37,18 +37,24 @@

- [Environment Variables](./environment.md)
- [REPL](./repl.md)

# Assistant
# AI

- [Overview](./assistant/assistant.md)
- [Configuration](./assistant/configuration.md)
- [Assistant Panel](./assistant/assistant-panel.md)
- [Contexts](./assistant/contexts.md)
- [Inline Assistant](./assistant/inline-assistant.md)
- [Commands](./assistant/commands.md)
- [Prompts](./assistant/prompting.md)
- [Context Servers](./assistant/context-servers.md)
- [Model Context Protocol](./assistant/model-context-protocol.md)
- [Model Improvement](./model-improvement.md)
- [Overview](./ai/ai.md)
- [Agent Panel](./ai/agent-panel.md)
- [Tools](./ai/tools.md)
- [Model Temperature](./ai/temperature.md)
- [Inline Assistant](./ai/inline-assistant.md)
- [Edit Prediction](./ai/edit-prediction.md)
- [Text Threads](./ai/text-threads.md)
- [Rules](./ai/rules.md)
- [Model Context Protocol](./ai/mcp.md)
- [Subscription](./ai/subscription.md)
- [Plans and Usage](./ai/plans-and-usage.md)
- [Billing](./ai/billing.md)
- [Models](./ai/models.md)
- [Use Your Own API Keys](./ai/custom-api-keys.md)
- [Privacy and Security](./ai/privacy-and-security.md)
- [AI Improvement](./ai/ai-improvement.md)

# Extensions

docs/src/ai/agent-panel.md

@ -0,0 +1,158 @@

# Agent Panel

The Agent Panel provides you with a way to interact with LLMs.
You can use it for various tasks, such as generating code, asking questions about your code base, and general requests such as drafting emails and documentation.

To open the Agent Panel, use the `agent: new thread` action in [the Command Palette](../getting-started.md#command-palette) or click the ✨ (sparkles) icon in the status bar.
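
If you start new threads often, you can also bind the action to a key of your choice. The snippet below is a minimal sketch for your `keymap.json`. It assumes that `agent::NewThread` is the action behind `agent: new thread`, and the key combination is hypothetical, so adjust both to your setup.

```json
[
  {
    // "Workspace" makes the binding available anywhere in the window.
    "context": "Workspace",
    "bindings": {
      // Hypothetical shortcut; pick one that doesn't clash with your existing bindings.
      "ctrl-alt-n": "agent::NewThread"
    }
  }
]
```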

|

||||

|

||||

If you're using the Agent Panel for the first time, you'll need to [configure at least one LLM provider](./configuration.md#providers).

|

||||

|

||||

## Overview

|

||||

|

||||

After you've configured some LLM providers, you're ready to start working with the Agent Panel.

|

||||

|

||||

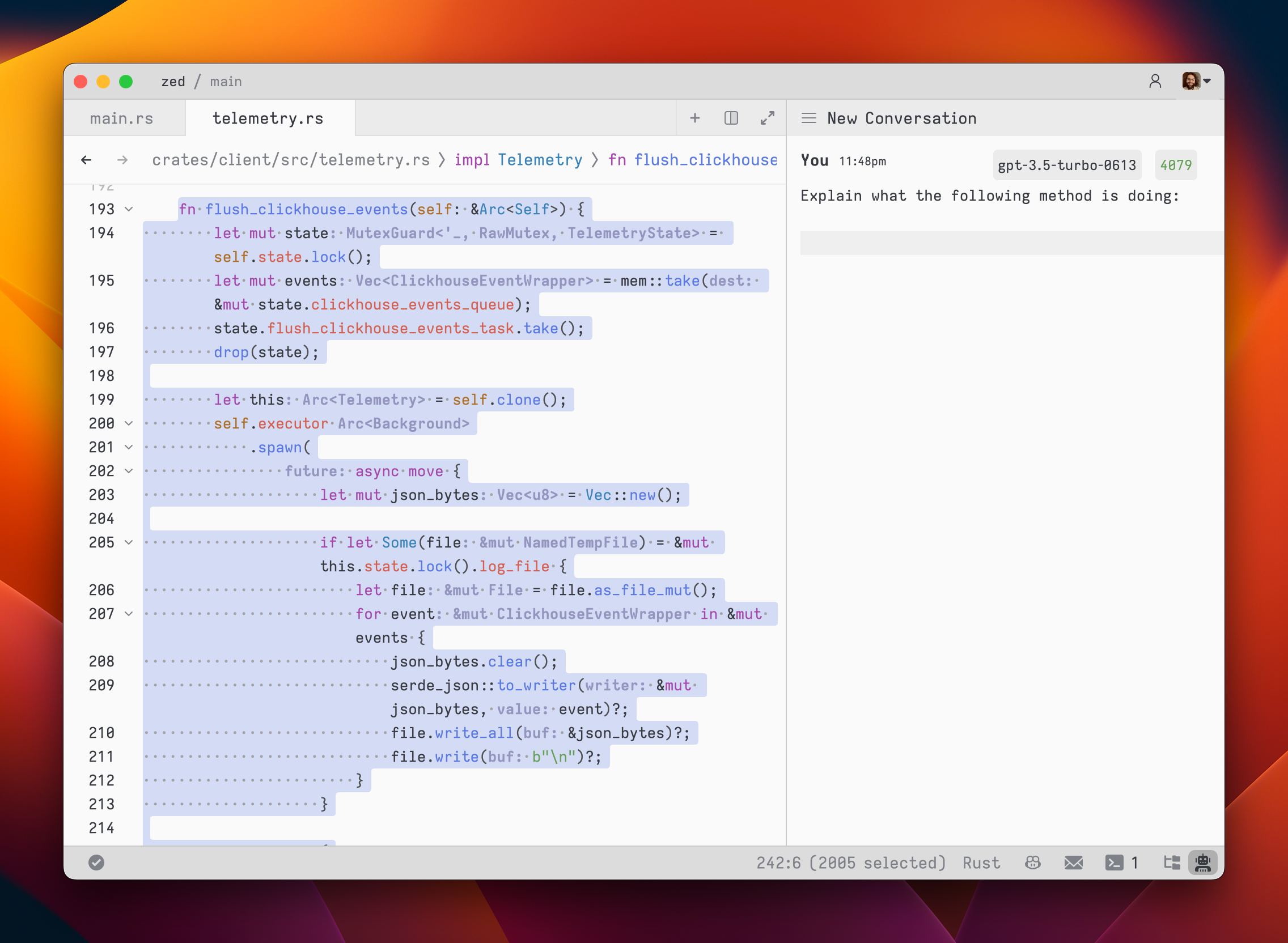

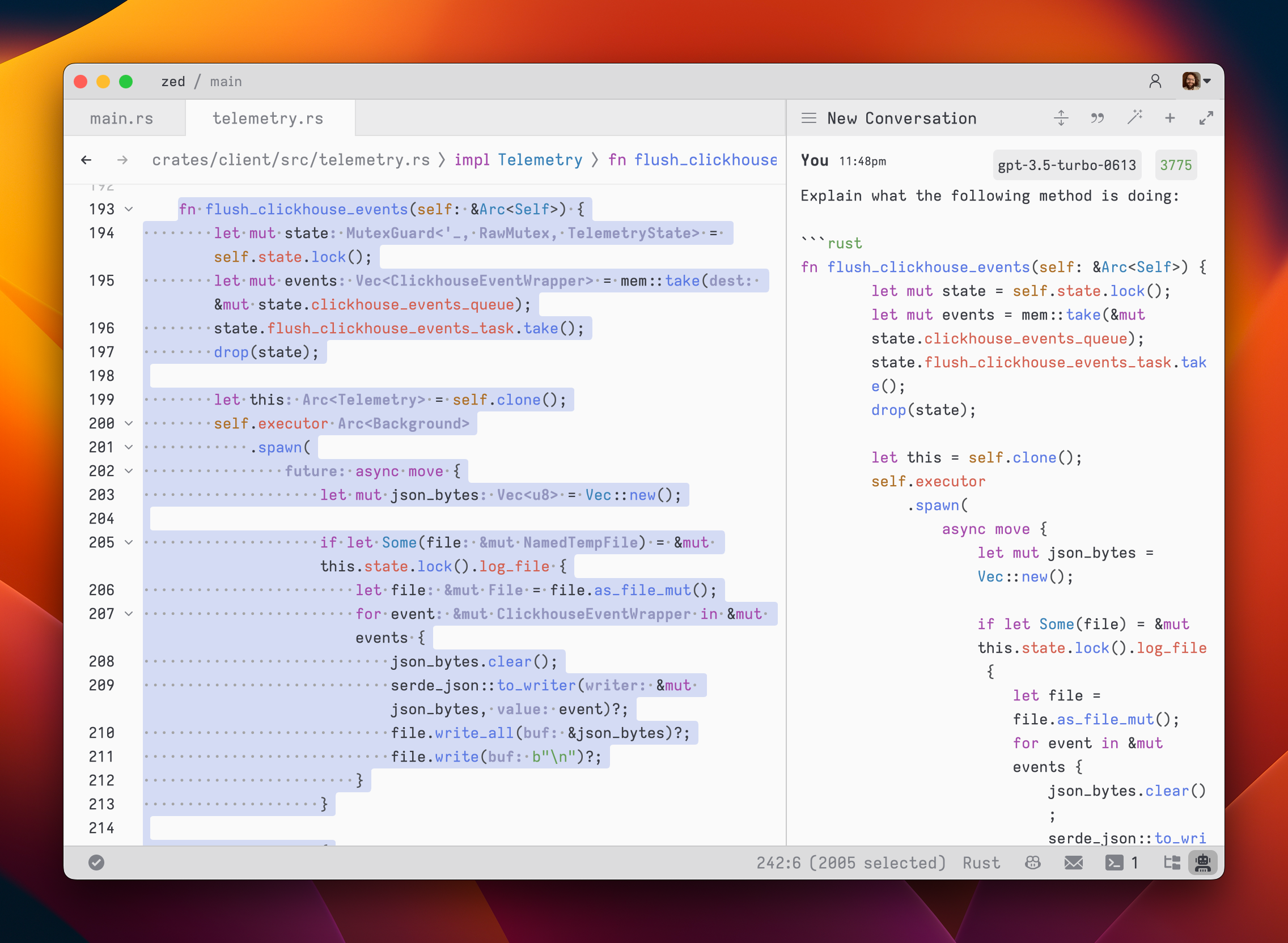

Type at the message editor and hit `enter` to submit your prompt to the LLM.

|

||||

If you need extra room to type, you can expand the message editor with {#kb agent::ExpandMessageEditor}.

|

||||

|

||||

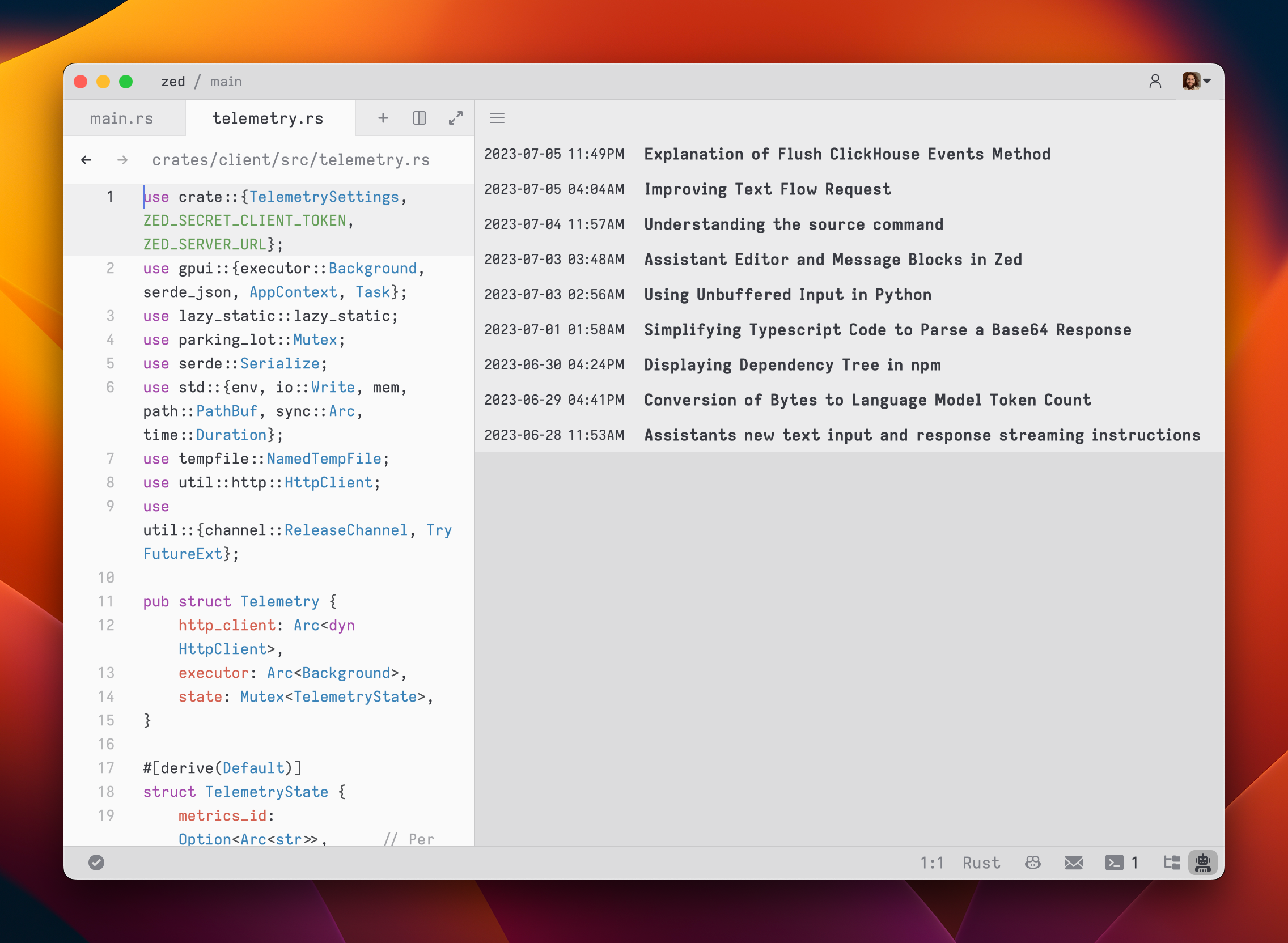

You should start to see the responses stream in with indications of which [tools](/ai/tools.md) the AI is using to fulfill your prompt.

|

||||

For example, if the AI chooses to perform an edit, you will see a card with the diff.

|

||||

|

||||

### Editing Messages

|

||||

|

||||

Any message that you send to the AI is editable.

|

||||

You can click on the card that contains your message and re-submit it with an adjusted prompt and/or new pieces of context.

|

||||

|

||||

### Checkpoints

|

||||

|

||||

Every time the AI performs an edit, you should see a "Restore Checkpoint" button to the top of your message.

|

||||

This allows you to return your code base to the state it was in prior to that message.

|

||||

This is usually valuable if the AI's edit doesn't go in the right direction.

|

||||

|

||||

### Navigating History

|

||||

|

||||

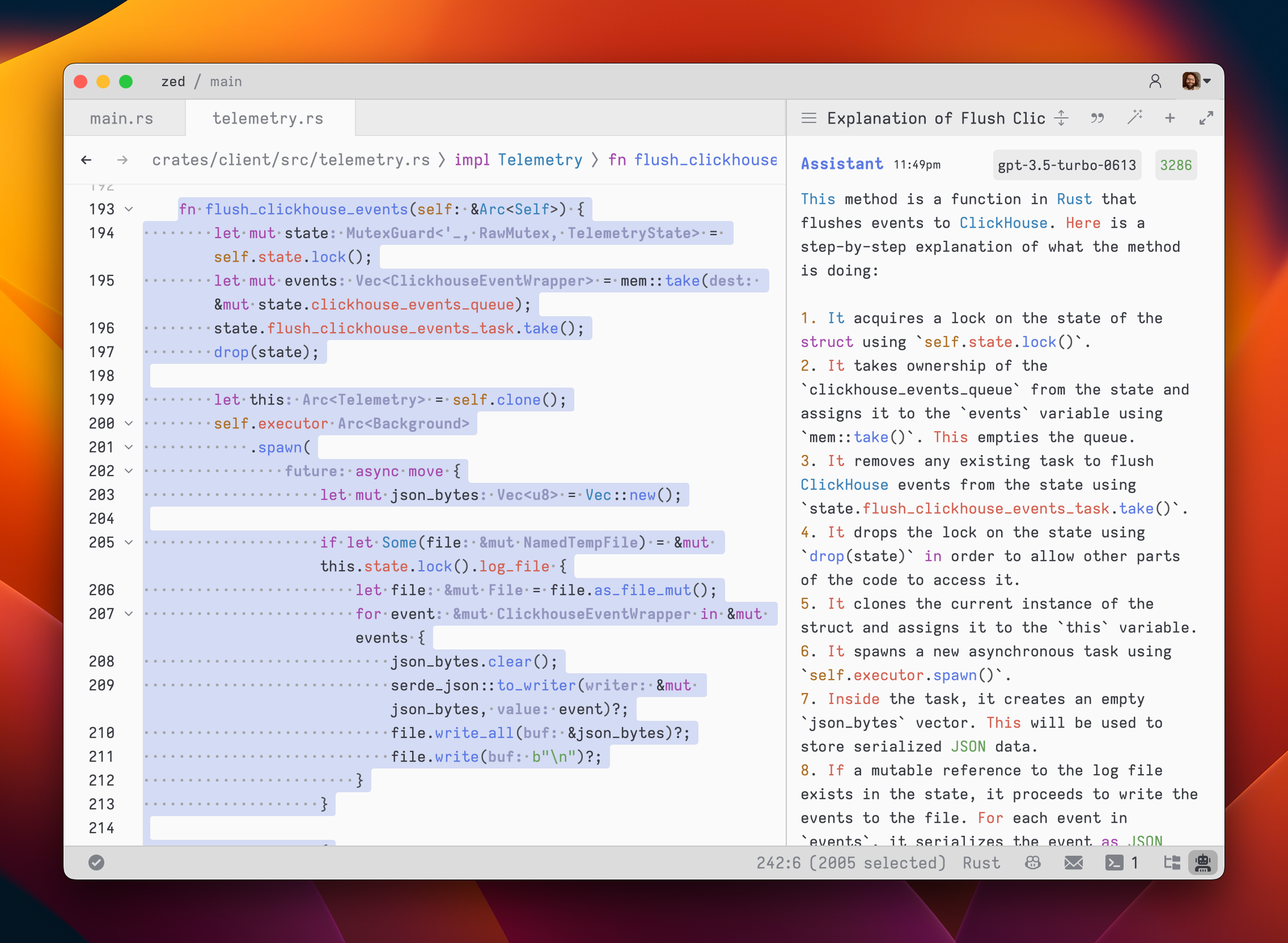

To quickly navigate through recently opened threads, use the {#kb agent::ToggleNavigationMenu} binding, when focused on the panel's editor, or click the hamburger icon button at the top left of the panel to open the dropdown that shows you the six most recent interactions with the LLM.

|

||||

|

||||

The items in this menu work similarly to tabs, and closing them from there doesn't delete the thread; just takes them out of the recent list.

|

||||

|

||||

You can also view all historical conversations with the `View All` option from within the same menu or by reaching for the {#kb agent::OpenHistory} binding.

|

||||

|

||||

### Reviewing Changes

|

||||

|

||||

If you are using a profile that includes write tools, and the agent has made changes to your project, you'll notice the Agent Panel surfaces the fact that edits have been applied.

|

||||

|

||||

You can click on the accordion bar that shows up right above the panel's editor see which files have been changed, or click `Review Changes` ({#kb agent::OpenAgentDiff}) to open a multi-buffer to review them.

|

||||

Reviewing includes the option to accept or reject each edit, or accept or reject all edits.

|

||||

|

||||

Diffs with changes also appear in individual buffers.

|

||||

So, if your active tab had changes added by the AI, you'll see diffs with the same accept/reject controls as in the multi-buffer.

|

||||

|

||||

## Adding Context

|

||||

|

||||

Although Zed's agent is very efficient at reading through your code base to autonomously pick up relevant files, directories, and other context, manually adding context is still usually encouraged as a way to speed up and improve the AI's response quality.

|

||||

|

||||

If you have a tab open when triggering the Agent Panel, that tab will appear as a suggested context in form of a dashed button.

|

||||

You can also add other forms of context, like files, rules, and directories, by either typing `@` or hitting the `+` icon button.

|

||||

|

||||

You can even add previous threads as context with the `@thread` command, or by selecting "Start new from summary" option from the top-right menu in the agent panel to continue a longer conversation and keep it within the size of context window.

|

||||

|

||||

Images are also supported, and pasting them over in the panel's editor works.

|

||||

|

||||

### Token Usage

|

||||

|

||||

Zed surfaces how many tokens you are consuming for your currently active thread in the panel's toolbar.

|

||||

Depending on how many pieces of context you add, your token consumption can grow rapidly.

|

||||

|

||||

With that in mind, once you get close to the model's context window, we'll display a banner on the bottom of the message editor offering to start a new thread with the current one summarized and added as context.

|

||||

You can also do this at any time with an ongoing thread via the "Agent Options" menu on the top right.

|

||||

|

||||

## Changing Models

|

||||

|

||||

After you've configured your LLM providers—either via [a custom API key](/ai/custom-api-keys.md) or through [Zed's hosted models](/ai/models.md)—you can switch between them by clicking on the model selector on the message editor or by using the {#kb agent::ToggleModelSelector} keybinding.

|

||||

|

||||

## Using Tools

|

||||

|

||||

The new Agent Panel supports tool calling, which enables agentic collaboration with AI.

|

||||

Zed comes with [several built-in tools](/ai/tools.md) that allow models to perform tasks such as searching through your codebase, editing files, running commands, and others.

|

||||

|

||||

You can also extend the set of available tools via [MCP Servers](/ai/mcp.md).
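
As a rough sketch, a custom MCP server is registered in `settings.json` under a `context_servers` key that tells Zed which command to launch. The server ID, command, and package name below are hypothetical placeholders, and the exact shape of this setting is an assumption here. See the [Model Context Protocol](/ai/mcp.md) page for the authoritative format.

```json
{
  "context_servers": {
    // Hypothetical server ID; it identifies this server in Zed's UI.
    "my-mcp-server": {
      "command": {
        // Executable that starts the server, plus its arguments and extra environment variables.
        "path": "npx",
        "args": ["-y", "@example/mcp-server"],
        "env": {}
      },
      "settings": {}
    }
  }
}
```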


### Profiles

Profiles let you group tools.
Zed offers three built-in profiles and you can create as many custom ones as you want.

#### Built-in Profiles

- `Write`: A profile with tools to allow the LLM to write to your files and run terminal commands. This one essentially has all built-in tools turned on.
- `Ask`: A profile with read-only tools. Best for asking questions about your code base without the fear of the agent making changes.
- `Minimal`: A profile with no tools. Best for general conversations with the LLM where no knowledge of your code is necessary.

You can explore the exact tools enabled in each profile by clicking on the profile selector button > `Configure Profiles…` > the one you want to check out.

#### Custom Profiles

You may find yourself in a situation where the built-in profiles don't quite fit your specific needs.
Zed's Agent Panel allows for building custom profiles.

You can create a new profile via the `Configure Profiles…` option in the profile selector.
From here, you can choose to `Add New Profile` or fork an existing one with your choice of tools and a custom profile name.

You can also override built-in profiles.
With a built-in profile selected, in the profile selector, navigate to `Configure Tools`, and select the tools you'd like.

Zed will store this profile in your settings using the same profile name as the default you overrode.

All custom profiles can be edited via the UI or by hand under the `assistant.profiles` key in your `settings.json` file.
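
For reference, a hand-written custom profile under `assistant.profiles` might look like the minimal sketch below. The profile ID (`read-and-edit`) is hypothetical. The tool names are assumptions based on Zed's built-in tools, so check the [Tools](/ai/tools.md) page for the exact identifiers available in your version.

```json
{
  "assistant": {
    "profiles": {
      // Hypothetical profile ID; "name" is the label shown in the profile selector.
      "read-and-edit": {
        "name": "Read and Edit",
        "tools": {
          // Assumed tool names: true enables a tool for this profile, false disables it.
          "read_file": true,
          "grep": true,
          "find_path": true,
          "list_directory": true,
          "edit_file": true,
          "terminal": false
        }
      }
    }
  }
}
```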


### Model Support

Tool calling needs to be individually supported by each model and model provider.
Therefore, some models may not be able to use tools in Zed yet, even though the tools are available.
If you select such a model, you should see a disabled "No tools" button.

We want to support all of them, though!
We may prioritize which ones to focus on based on popularity and user feedback, so feel free to help and contribute.

All [Zed's hosted models](./models.md) support tool calling out-of-the-box.

### MCP Servers

As with the built-in tools, some models may not support all tools included in a given MCP Server.
Zed's UI will inform you about this via a warning icon that appears close to the model selector.

## Text Threads

["Text threads"](./text-threads.md) present your conversation with the LLM in a different format: as raw text.
With text threads, you have full control over the conversation data.
You can remove and edit responses from the LLM, swap roles, and include more context earlier in the conversation.

If you've been using Zed for some time, you'll notice that text threads are our original assistant panel; users love it for the control it offers.
We do not plan to deprecate text threads, but note that if you want the AI to write to your code base autonomously, that's only available in the newer, and now default, "Threads".

### Text Thread History

Content from text threads is saved to your file system.
Visit [the dedicated docs](./text-threads.md#history) for more info.

## Errors and Debugging

In case of any error or strange LLM response behavior, the best way to help the Zed team debug is by reaching for the `agent: open thread as markdown` action and attaching that data as part of your issue on GitHub.

This action exposes the entire thread in the form of Markdown and allows for deeper understanding of what each tool call was doing.

You can also open threads as Markdown by clicking on the file icon button, to the right of the thumbs down button, when focused on the panel's editor.

## Feedback

Every change we make to Zed's system prompt and tool set needs to be backed by an eval with good scores.

Every time the LLM performs a weird change or investigates a certain topic in your codebase completely incorrectly, it's an indication that there's an improvement opportunity.

> Note that rating responses will send your data related to that response to Zed's servers.
> See [AI Improvement](./ai-improvement.md) and [Privacy and Security](/ai/privacy-and-security.md) for more information about Zed's approach to AI improvement, privacy, and security.
> **_If you don't want data persisted on Zed's servers, don't rate_**. We will not collect data for improving our Agentic offering without you explicitly rating responses.

The best way you can help influence the next change to Zed's system prompt and tools is by rating the LLM's response via the thumbs up/down buttons at the end of every response.
In case of a thumbs down, a new text area will show up where you can add more specifics about what happened.

You can provide feedback on the thread at any point after the agent responds, and multiple times within the same thread.

@ -1,16 +1,36 @@

# Zed Model Improvement
# Zed AI Improvement

## Zed Assistant
## Agent Panel

When using the Zed Assistant, Zed does not persistently store user content or use user content for training of its models.
### Opt-In

When using upstream services through Zed AI, we require similar assurances from our service providers. For example, usage of Anthropic Claude 3.5 via Zed AI in the Assistant is governed by the [Anthropic Commercial Terms](https://www.anthropic.com/legal/commercial-terms) which includes the following:
When using the Zed Agent Panel, whether through Zed's hosted AI service or via connecting a non-Zed AI service via API key, Zed does not persistently store user content or use user content to evaluate and/or improve our AI features, unless it is explicitly shared with Zed. Each share is opt-in, and sharing once will not cause future content or data to be shared again.

> Note that rating responses will send your data related to that response to Zed's servers.
> **_If you don't want data persisted on Zed's servers, don't rate_**. We will not collect data for improving our Agentic offering without you explicitly rating responses.

When using upstream services through Zed AI, we require assurances from our service providers that your user content won't be used for training models. For example, usage of Anthropic Claude 3.5 via Zed AI in the Assistant is governed by the [Anthropic Commercial Terms](https://www.anthropic.com/legal/commercial-terms) which includes the following:

> "Anthropic may not train models on Customer Content from paid Services."

When you directly connect the Zed Assistant with a non Zed AI service (e.g. via API key) Zed does not have access to your user content. Users should reference their agreement with the service provider to understand what terms and conditions apply.
When you directly connect the Zed Assistant with a non-Zed AI service (e.g. via API key), Zed does not have control over how your data is used by that service provider. You should reference your agreement with each service provider to understand what terms and conditions apply.

## Zed Edit Predictions
### Data we collect

For prompts you have explicitly shared with us, Zed may store copies of those prompts and other data about the specific use of the Agent Panel.

This data includes:

- The prompt given to the Agent
- Any commentary you include
- Product telemetry about the agentic thread
- Metadata about your Zed installation

### Data Handling

Collected data is stored in Snowflake, a private database where we track other metrics. We periodically review this data to improve our overall agentic approach and refine the product via our system prompt, tool use, etc. We ensure any included data is anonymized and contains no sensitive information (access tokens, user IDs, email addresses, etc.).

## Edit Predictions

By default, when using Zed Edit Predictions, Zed does not persistently store user content or use user content for training of its models.

docs/src/ai/ai.md

@ -0,0 +1,35 @@

# AI

Zed offers various features that integrate LLMs smoothly into the editor.

## Setting up AI in Zed

- [Models](./models.md): Information about the various language models available in Zed.

- [Configuration](./configuration.md): Configure the Agent, and set up different language model providers like Anthropic, OpenAI, Ollama, Google AI, and more.

- [Custom API Keys](./custom-api-keys.md): How to use your own API keys with the AI features.

- [Subscription](./subscription.md): Information about Zed's subscriptions and other billing-related information.

- [Privacy and Security](./privacy-and-security.md): Understand how Zed handles privacy and security with AI features.

## Agentic Editing

- [Agent Panel](./agent-panel.md): Create and collaboratively edit new threads, and manage interactions with language models.

- [Rules](./rules.md): How to define rules for AI interactions.

- [Tools](./tools.md): Explore the tools that enhance the AI's capabilities to interact with your codebase.

- [Model Context Protocol](./mcp.md): Learn about context servers that enhance the Assistant's capabilities.

- [Inline Assistant](./inline-assistant.md): Discover how to use the agent to power inline transformations directly within your code editor and terminal.

## Edit Prediction

- [Edit Prediction](./edit-prediction.md): Learn about Zed's Edit Prediction feature that helps autocomplete your code.

## Text Thread Interface

- [Text Threads](./text-threads.md): Learn about an alternative text-based interface for interacting with language models.

docs/src/ai/billing.md

@ -0,0 +1,27 @@

# Billing

We use Stripe as our billing and payments provider. All Pro plans require payment via credit card.
For invoice-based billing, a Business plan is required. Contact sales@zed.dev for more information.

## Settings {#settings}

You can access billing settings at [zed.dev/account](https://zed.dev/account).
Clicking the button under Account Settings will navigate you to Stripe’s secure portal, where you can update all billing-related settings and configuration.

## Billing Cycles {#billing-cycles}

Zed is billed on a monthly basis based on the date you initially subscribe.

We’ll also bill in-month for additional prompts used beyond your plan’s prompt limit, if usage exceeds $20 before month end. See [usage-based pricing](./plans-and-usage.md#ubp) for more.

## Invoice History {#invoice-history}

You can access your invoice history by navigating to [zed.dev/account](https://zed.dev/account) and clicking [button].

From Stripe’s secure portal, you can download all current and historical invoices.

## Updating Billing Information {#updating-billing-info}

You can update your payment method, company name, address, and tax information through the billing portal.

Please note that changes to billing information will **only** affect future invoices; **we cannot modify historical invoices**.

@ -1,24 +1,21 @@

# Configuring the Assistant
# Configuring Custom API Keys

Here's a bird's-eye view of all the configuration options available in Zed's Assistant:
While Zed offers hosted versions of models through our various plans, we're always happy to support users wanting to supply their own API keys for LLM providers.

- Configure LLM Providers
  - [Zed AI (Configured by default when signed in)](#zed-ai)
  - [Anthropic](#anthropic)
  - [GitHub Copilot Chat](#github-copilot-chat)
  - [Google AI](#google-ai)
  - [Ollama](#ollama)
  - [OpenAI](#openai)
  - [DeepSeek](#deepseek)
  - [LM Studio](#lmstudio)
- Advanced configuration options
  - [Configuring Endpoints](#custom-endpoint)
  - [Configuring Timeouts](#provider-timeout)
  - [Configuring Models](#default-model)
  - [Configuring Feature-specific Models](#feature-specific-models)
  - [Configuring Alternative Models for Inline Assists](#alternative-assists)
  - [Common Panel Settings](#common-panel-settings)
  - [General Configuration Example](#general-example)

> Using your own API keys is **_free_** - you do not need to subscribe to a Zed plan to use our AI features with your own keys.

## Supported LLM Providers

| Provider                                        | Tool Use Supported |
| ----------------------------------------------- | ------------------ |
| [Anthropic](#anthropic)                         | ✅                 |
| [GitHub Copilot Chat](#github-copilot-chat)     | In Some Cases      |
| [Google AI](#google-ai)                         | ✅                 |
| [Ollama](#ollama)                               | ✅                 |
| [OpenAI](#openai)                               | ✅                 |
| [DeepSeek](#deepseek)                           | 🚫                 |
| [OpenAI API Compatible](#openai-api-compatible) | 🚫                 |
| [LM Studio](#lmstudio)                          | 🚫                 |

## Providers {#providers}

@ -26,13 +23,11 @@ To access the Assistant configuration view, run `assistant: show configuration`

Below you can find all the supported providers available so far.

### Zed AI {#zed-ai}

A hosted service providing convenient and performant support for AI-enabled coding in Zed, powered by Anthropic's Claude 3.5 Sonnet and accessible just by signing in.

### Anthropic {#anthropic}

You can use Claude 3.5 Sonnet via [Zed AI](#zed-ai) for free. To use other Anthropic models you will need to configure them by providing your own API key.
> 🔨 Supports tool use

You can use Anthropic models with the Zed assistant by choosing it via the model dropdown in the assistant panel.

1. Sign up for Anthropic and [create an API key](https://console.anthropic.com/settings/keys)
2. Make sure that your Anthropic account has credits

@ -89,10 +84,15 @@ by changing the mode in of your models configuration to `thinking`, for example:

### GitHub Copilot Chat {#github-copilot-chat}

> 🔨 Supports tool use in some cases.
> See [here](https://github.com/zed-industries/zed/blob/9e0330ba7d848755c9734bf456c716bddf0973f3/crates/language_models/src/provider/copilot_chat.rs#L189-L198) for the supported subset.

You can use GitHub Copilot Chat with the Zed assistant by choosing it via the model dropdown in the assistant panel.

### Google AI {#google-ai}

> 🔨 Supports tool use

You can use Gemini 1.5 Pro/Flash with the Zed assistant by choosing it via the model dropdown in the assistant panel.

1. Go to the Google AI Studio site and [create an API key](https://aistudio.google.com/app/apikey).

@ -127,6 +127,8 @@ Custom models will be listed in the model dropdown in the assistant panel.

### Ollama {#ollama}

> 🔨 Supports tool use

Download and install Ollama from [ollama.com/download](https://ollama.com/download) (Linux or macOS) and ensure it's running with `ollama --version`.

1. Download one of the [available models](https://ollama.com/models), for example, for `mistral`:

@ -174,6 +176,8 @@ You may also optionally specify a value for `keep_alive` for each available mode

### OpenAI {#openai}

> 🔨 Supports tool use

1. Visit the OpenAI platform and [create an API key](https://platform.openai.com/account/api-keys)
2. Make sure that your OpenAI account has credits
3. Open the configuration view (`assistant: show configuration`) and navigate to the OpenAI section

@ -214,6 +218,8 @@ You must provide the model's Context Window in the `max_tokens` parameter, this

### DeepSeek {#deepseek}

> 🚫 Does not support tool use 🚫

1. Visit the DeepSeek platform and [create an API key](https://platform.deepseek.com/api_keys)
2. Open the configuration view (`assistant: show configuration`) and navigate to the DeepSeek section
3. Enter your DeepSeek API key

@ -251,7 +257,7 @@ The Zed Assistant comes pre-configured to use the latest version for common mode

Custom models will be listed in the model dropdown in the assistant panel. You can also modify the `api_url` to use a custom endpoint if needed.
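
As a minimal sketch, the snippet below adds a custom DeepSeek model entry and sets `api_url` in `settings.json`. It assumes the DeepSeek provider accepts the same `available_models` shape used for other providers in these docs. The model name, display name, and token limit are illustrative, so check DeepSeek's documentation for current values.

```json
{
  "language_models": {
    "deepseek": {
      // DeepSeek's public API endpoint; point it elsewhere to use a compatible proxy.
      "api_url": "https://api.deepseek.com",
      "available_models": [
        {
          "name": "deepseek-chat",
          "display_name": "DeepSeek Chat",
          // Context window size in tokens (illustrative value).
          "max_tokens": 64000
        }
      ]
    }
  }
}
```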


### OpenAI API Compatible
### OpenAI API Compatible {#openai-api-compatible}

Zed supports using OpenAI compatible APIs by specifying a custom endpoint (`api_url`) and `available_models` for the OpenAI provider.
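
A minimal sketch of such a configuration follows. The endpoint URL, model name, and token limit are hypothetical placeholders for whatever your OpenAI-compatible service exposes. The `max_tokens` field is assumed to hold the model's context window, as the OpenAI provider section describes.

```json
{
  "language_models": {
    "openai": {
      // Hypothetical OpenAI-compatible endpoint.
      "api_url": "https://api.example.com/v1",
      "available_models": [
        {
          // Model ID as the service expects it, plus the label shown in Zed's model dropdown.
          "name": "example-model",
          "display_name": "Example Model",
          // The model's context window, in tokens.
          "max_tokens": 128000
        }
      ]
    }
  }
}
```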

|

||||

|

||||

@ -277,6 +283,8 @@ Example configuration for using X.ai Grok with Zed:

|

||||

|

||||

### LM Studio {#lmstudio}

|

||||

|

||||

> 🚫 Does not support tool use 🚫

|

||||

|

||||

1. Download and install the latest version of LM Studio from https://lmstudio.ai/download

|

||||

2. In the app press ⌘/Ctrl + Shift + M and download at least one model, e.g. qwen2.5-coder-7b

|

||||

|

||||

@ -293,150 +301,3 @@ Example configuration for using X.ai Grok with Zed:

|

||||

```

|

||||

|

||||

Tip: Set [LM Studio as a login item](https://lmstudio.ai/docs/advanced/headless#run-the-llm-service-on-machine-login) to automate running the LM Studio server.

|

||||

|

||||

## Advanced Configuration {#advanced-configuration}

|

||||

|

||||

### Custom Endpoints {#custom-endpoint}

|

||||

|

||||

You can use a custom API endpoint for different providers, as long as it's compatible with the providers API structure.

|

||||

|

||||

To do so, add the following to your Zed `settings.json`:

|

||||

|

||||

```json

|

||||

{

|

||||

"language_models": {

|

||||

"some-provider": {

|

||||

"api_url": "http://localhost:11434"

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

Where `some-provider` can be any of the following values: `anthropic`, `google`, `ollama`, `openai`.

|

||||

|

||||

### Configuring Models {#default-model}

|

||||

|

||||

Zed's hosted LLM service sets `claude-3-7-sonnet-latest` as the default model.

|

||||

However, you can change it either via the model dropdown in the Assistant Panel's bottom-left corner or by manually editing the `default_model` object in your settings:

|

||||

|

||||

```json

|

||||

{

|

||||

"assistant": {

|

||||

"version": "2",

|

||||

"default_model": {

|

||||

"provider": "zed.dev",

|

||||

"model": "gpt-4o"

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

#### Feature-specific Models {#feature-specific-models}

> Currently only available in [Preview](https://zed.dev/releases/preview).

Zed allows you to configure different models for specific features. This provides flexibility to use more powerful models for certain tasks while using faster or more efficient models for others.

If a feature-specific model is not set, it falls back to the default model, which is the one you set in the Agent Panel.

You can configure the following feature-specific models:

- Thread summary model: used for generating thread summaries
- Inline assistant model: used for the inline assistant feature
- Commit message model: used for generating Git commit messages

Example configuration:

```json
{
  "assistant": {
    "version": "2",
    "default_model": {
      "provider": "zed.dev",
      "model": "claude-3-7-sonnet"
    },
    "inline_assistant_model": {
      "provider": "anthropic",
      "model": "claude-3-5-sonnet"
    },
    "commit_message_model": {
      "provider": "openai",
      "model": "gpt-4o-mini"
    },
    "thread_summary_model": {
      "provider": "google",
      "model": "gemini-2.0-flash"
    }
  }
}
```
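
The fallback rule above can be expressed as a small lookup. This is a minimal Python sketch, not Zed's implementation: it assumes the `assistant` settings have already been parsed into a dictionary shaped like the JSON example, and both `resolve_model` and the `FEATURE_KEYS` mapping are hypothetical names introduced for illustration.

```python
from typing import Any

# Hypothetical mapping from feature names to the setting keys in the example above.
FEATURE_KEYS = {
    "thread_summary": "thread_summary_model",
    "inline_assistant": "inline_assistant_model",
    "commit_message": "commit_message_model",
}


def resolve_model(assistant_settings: dict[str, Any], feature: str) -> dict[str, str]:
    """Return the model for `feature`, falling back to `default_model` if it is unset."""
    feature_model = assistant_settings.get(FEATURE_KEYS[feature])
    # An unset (or empty) feature-specific model falls back to the default model.
    return feature_model or assistant_settings["default_model"]


settings = {
    "version": "2",
    "default_model": {"provider": "zed.dev", "model": "claude-3-7-sonnet"},
    "commit_message_model": {"provider": "openai", "model": "gpt-4o-mini"},
}

print(resolve_model(settings, "commit_message"))
print(resolve_model(settings, "thread_summary"))
```

Here the commit message feature uses its own model, while thread summaries, which have no dedicated entry, use the default model.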

### Configuring Alternative Models for Inline Assists {#alternative-assists}

You can configure additional models that will be used to perform inline assists in parallel. When you do this, the inline assist UI will surface controls to cycle between the alternatives generated by each model. The models you specify here are always used in _addition_ to your default model. For example, the following configuration will generate two outputs for every assist: one with Claude 3.5 Sonnet, and one with GPT-4o.

```json
{
  "assistant": {
    "default_model": {
      "provider": "zed.dev",
      "model": "claude-3-5-sonnet"
    },
    "inline_alternatives": [
      {
        "provider": "zed.dev",
        "model": "gpt-4o"
      }
    ],
    "version": "2"
  }
}
```
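
Because alternatives are always added to the default model rather than replacing it, each inline assist produces one output for the default model plus one per entry in `inline_alternatives`. A minimal Python sketch of that rule, assuming the settings are already parsed into a dictionary; `models_for_inline_assist` is a hypothetical helper, and the `provider/model` strings are only a display format chosen for this example.

```python
from typing import Any


def models_for_inline_assist(assistant_settings: dict[str, Any]) -> list[str]:
    """List the models that run for each inline assist, default model first."""
    models = [assistant_settings["default_model"]]
    # Alternatives never replace the default model; they are appended to it.
    models.extend(assistant_settings.get("inline_alternatives", []))
    # Hypothetical display format: "provider/model".
    return [f'{m["provider"]}/{m["model"]}' for m in models]


settings = {
    "default_model": {"provider": "zed.dev", "model": "claude-3-5-sonnet"},
    "inline_alternatives": [{"provider": "zed.dev", "model": "gpt-4o"}],
    "version": "2",
}

print(models_for_inline_assist(settings))
```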

## Common Panel Settings {#common-panel-settings}

| key            | type    | default | description                                                                             |
| -------------- | ------- | ------- | --------------------------------------------------------------------------------------- |
| enabled        | boolean | true    | Setting this to `false` will completely disable the assistant                           |
| button         | boolean | true    | Show the assistant icon in the status bar                                               |
| dock           | string  | "right" | The default dock position for the assistant panel: `"left"`, `"right"`, or `"bottom"`   |
| default_height | number  | null    | The pixel height of the assistant panel when docked to the bottom                       |
| default_width  | number  | null    | The pixel width of the assistant panel when docked to the left or right                 |

## General Configuration Example {#general-example}

```json
{
  "assistant": {
    "enabled": true,
    "default_model": {
      "provider": "zed.dev",
      "model": "claude-3-7-sonnet"
    },
    "editor_model": {
      "provider": "openai",
      "model": "gpt-4o"
    },
    "inline_assistant_model": {
      "provider": "anthropic",
      "model": "claude-3-5-sonnet"
    },
    "commit_message_model": {
      "provider": "openai",
      "model": "gpt-4o-mini"
    },
    "thread_summary_model": {
      "provider": "google",
      "model": "gemini-1.5-flash"
    },
    "version": "2",
    "button": true,
    "default_width": 480,
    "dock": "right"
  }
}
```

279

docs/src/ai/edit-prediction.md

Normal file

@ -0,0 +1,279 @@

# Edit Prediction

|

||||

|

||||

Edit Prediction is Zed's native mechanism for predicting the code you want to write through AI.

|

||||

Each keystroke sends a new request to our [open source, open dataset Zeta model](https://huggingface.co/zed-industries/zeta) and it returns with individual or multi-line suggestions that can be quickly accepted by pressing `tab`.

|

||||

|

||||

## Configuring Zeta

|

||||

|

||||

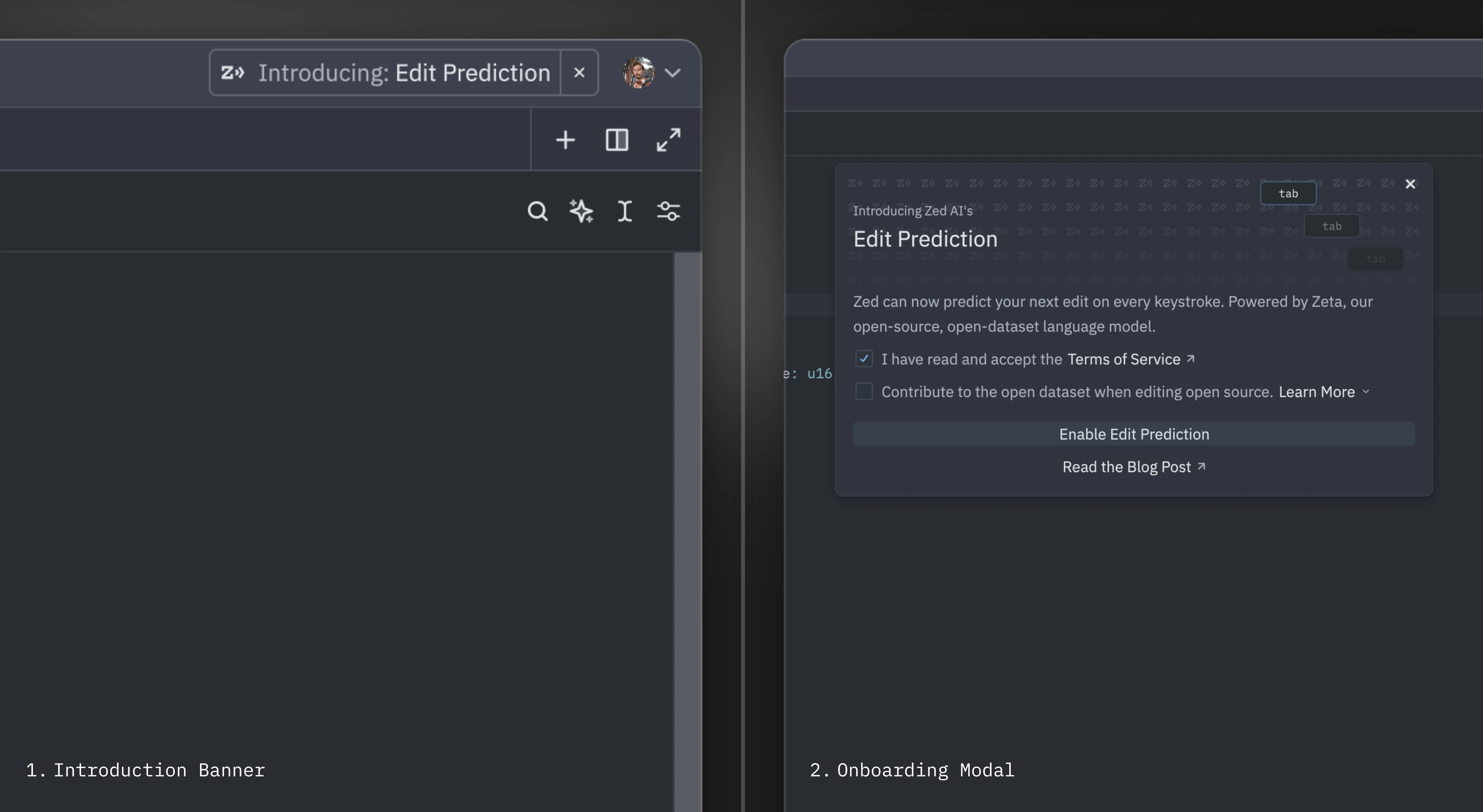

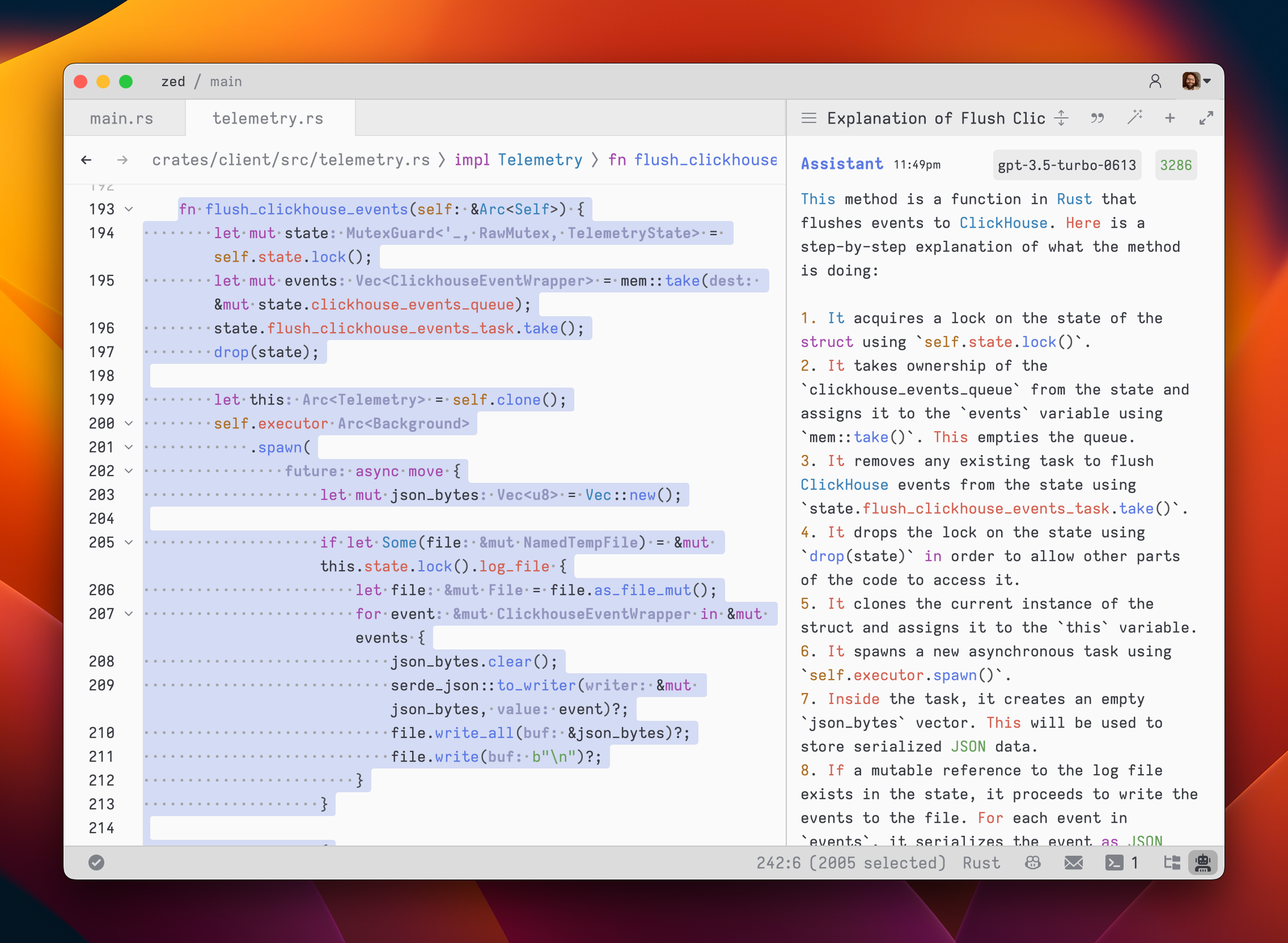

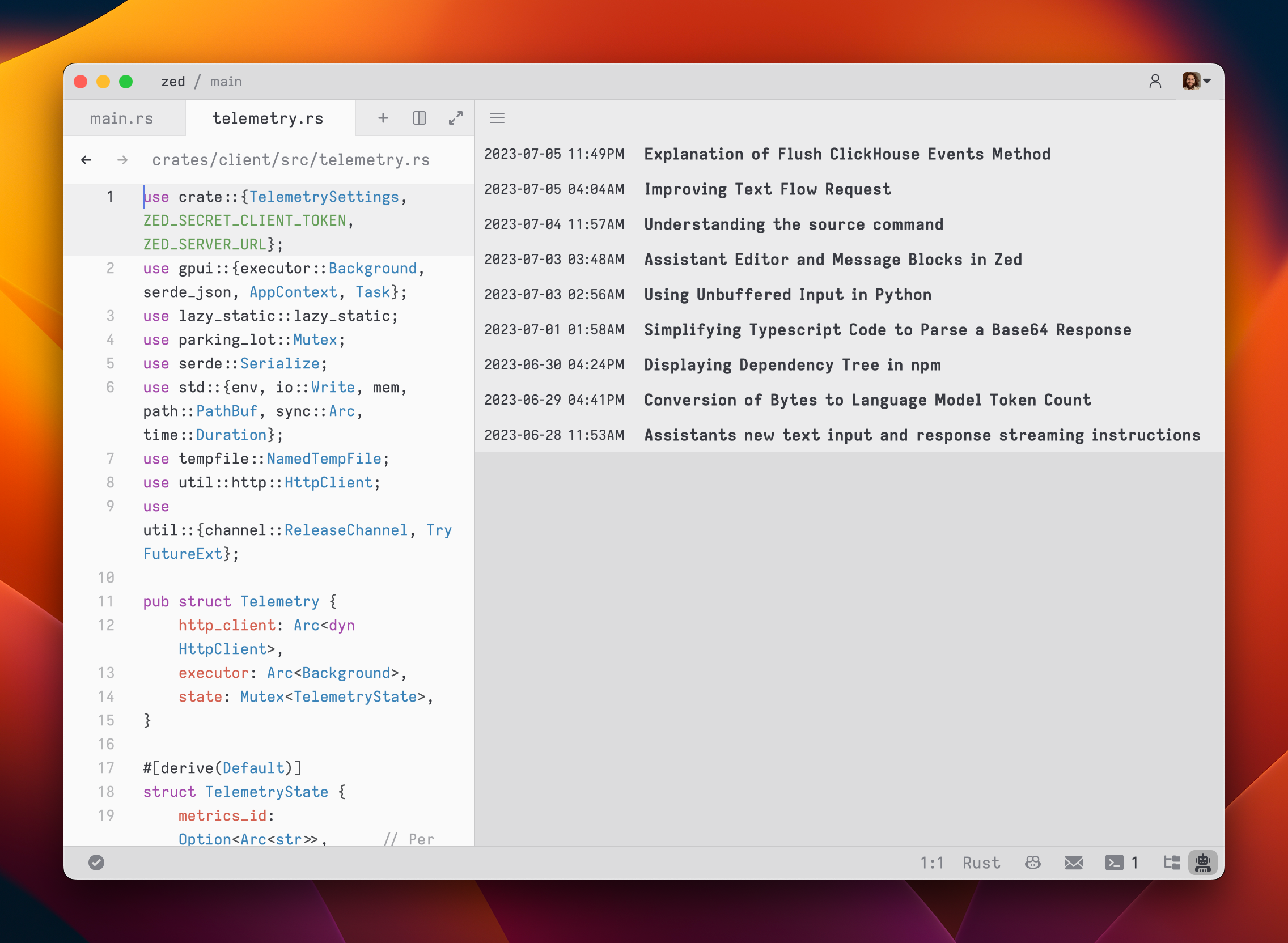

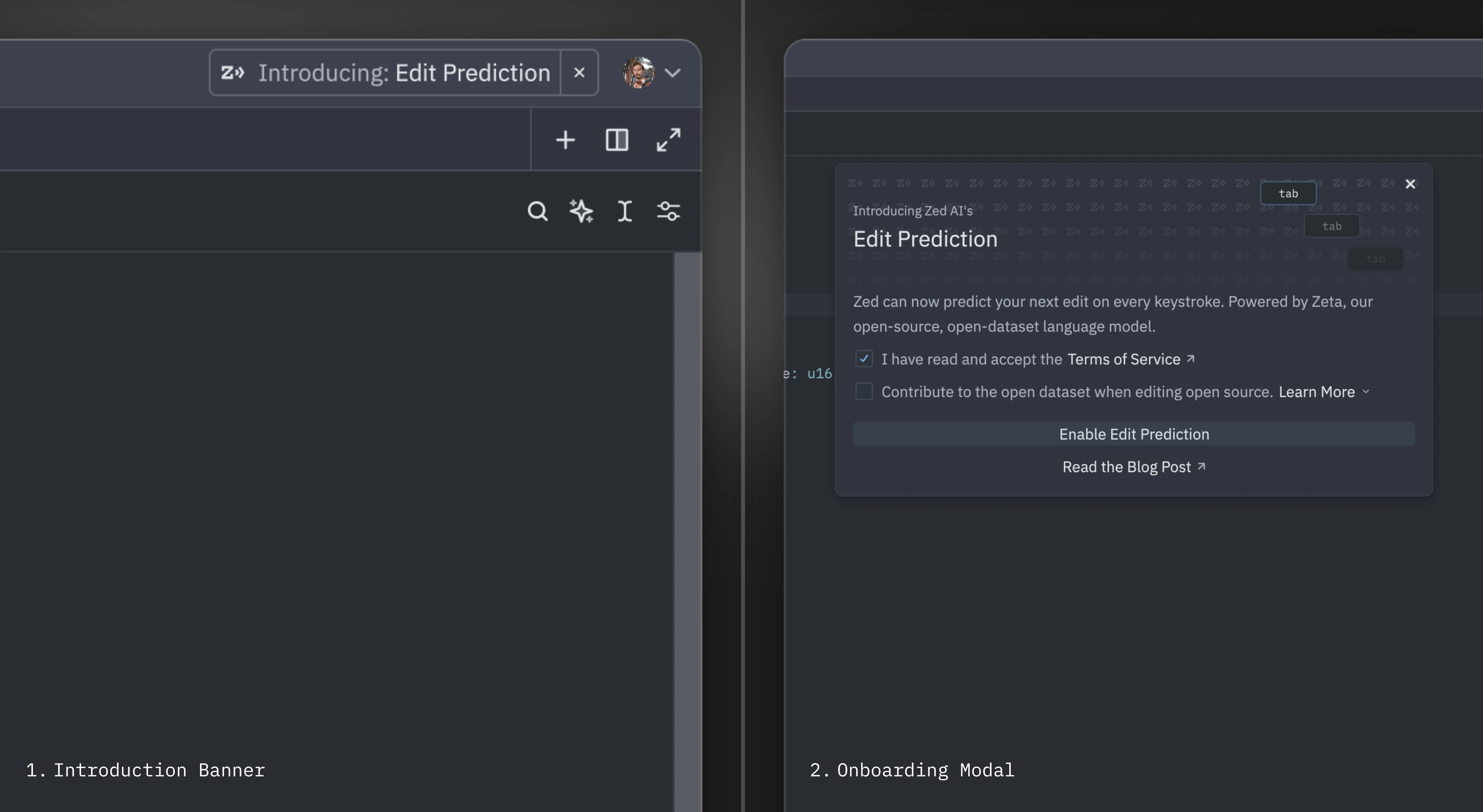

Zed's Edit Prediction was initially introduced via a banner on the title bar.

|

||||

Clicking on it would take you to a modal with a button ("Enable Edit Prediction") that sets `zed` as your `edit_prediction_provider`.

|

||||

|

||||

|

||||

|

||||

But, if you haven't come across the banner, Zed's Edit Prediction is the default edit prediction provider and you should see it right away in your status bar.

|

||||

|

||||

### Switching Modes {#switching-modes}

|

||||

|

||||

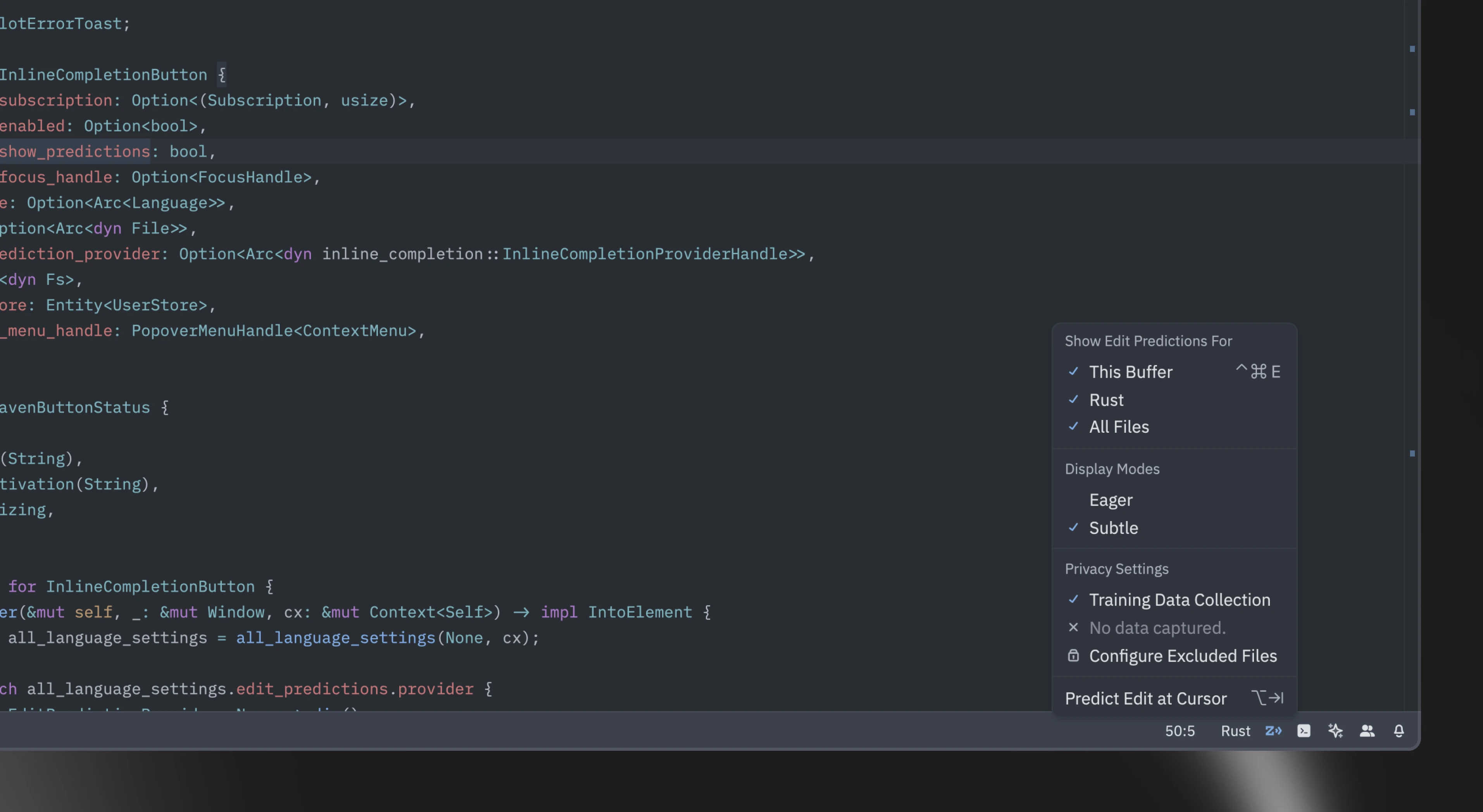

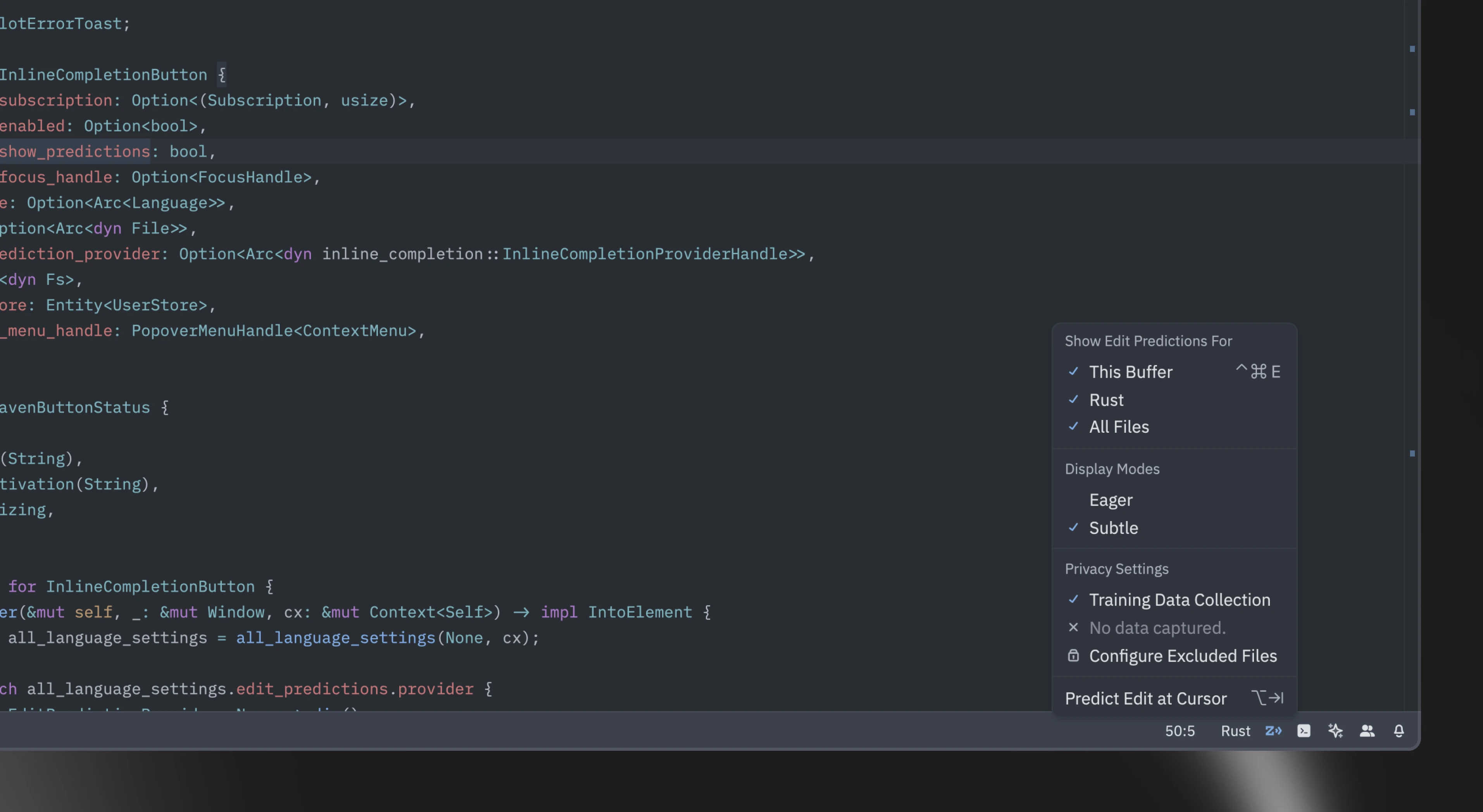

Zed's Edit Prediction comes with two different display modes:

|

||||

|

||||

1. `eager` (default): predictions are displayed inline as long as it doesn't conflict with language server completions

|

||||

2. `subtle`: predictions only appear inline when holding a modifier key (`alt` by default)

|

||||

|

||||

Toggle between them via the `mode` key:

|

||||

|

||||

```json

|

||||

"edit_predictions": {

|

||||

"mode": "eager" | "subtle"

|

||||

},

|

||||

```

|

||||

|

||||

Or directly via the UI through the status bar menu:

|

||||

|

||||

|

||||

|

||||
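
The two modes differ only in when a prediction is drawn inline. The Python sketch below is a simplified model of the rules described on this page, not Zed's code: `shows_inline` is a hypothetical function, and it assumes that holding the preview modifier (`alt` by default) also reveals a prediction in `eager` mode while the completions menu is open, as described under "Conflict With Other `tab` Actions".

```python
def shows_inline(mode: str, completions_menu_visible: bool, modifier_held: bool) -> bool:
    """Simplified (hypothetical) model of whether an edit prediction is shown inline."""
    if mode == "subtle":
        # Subtle mode: predictions appear only while the modifier key is held.
        return modifier_held
    if mode == "eager":
        # Eager mode: shown unless the language server completions menu is open;
        # holding the modifier hides the menu and previews the prediction.
        return not completions_menu_visible or modifier_held
    raise ValueError(f"unknown mode: {mode!r}")


for mode in ("eager", "subtle"):
    print(mode, shows_inline(mode, completions_menu_visible=False, modifier_held=False))
```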

### Conflict With Other `tab` Actions {#edit-predictions-conflict}

By default, when `tab` would normally perform a different action, Zed requires a modifier key to accept predictions:

1. When the language server completions menu is visible.
2. When your cursor isn't at the right indentation level.

In these cases, `alt-tab` is used instead to accept the prediction. When the language server completions menu is open, holding `alt` first will cause it to temporarily disappear in order to preview the prediction within the buffer.

On Linux, `alt-tab` is often used by the window manager for switching windows, so `alt-l` is provided as the default binding for accepting predictions. `tab` and `alt-tab` also work, but aren't displayed by default.
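
The rules for which key accepts a prediction can be summarized in a few lines. This Python sketch is illustrative only: `accept_key` and its parameters (including the `"linux"`/`"macos"` platform strings) are hypothetical, it covers only the default bindings described above, and it returns the key Zed displays, so on Linux it reports `alt-l` even though `tab` and `alt-tab` still work.

```python
def accept_key(completions_menu_visible: bool, at_indentation_level: bool, platform: str) -> str:
    """Return the default key shown for accepting an edit prediction (hypothetical model)."""
    if platform == "linux":
        # `alt-tab` is often taken by the window manager, so `alt-l` is displayed;
        # `tab` and `alt-tab` still work but are not shown.
        return "alt-l"
    # A conflict means `tab` would otherwise do something else.
    conflict = completions_menu_visible or not at_indentation_level
    return "alt-tab" if conflict else "tab"


print(accept_key(completions_menu_visible=False, at_indentation_level=True, platform="macos"))
print(accept_key(completions_menu_visible=True, at_indentation_level=True, platform="macos"))
```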

{#action editor::AcceptPartialEditPrediction} ({#kb editor::AcceptPartialEditPrediction}) can be used to accept the current edit prediction up to the next word boundary.

To configure other providers, see the [Configuring GitHub Copilot](#github-copilot) and [Configuring Supermaven](#supermaven) sections below. These providers only support text insertions at the current cursor position, whereas the Zeta model can provide multiple predictions, including deletions.

## Configuring Edit Prediction Keybindings {#edit-predictions-keybinding}

By default, `tab` is used to accept edit predictions. You can use another keybinding by inserting this in your keymap:

```json
{
  "context": "Editor && edit_prediction",
  "bindings": {
    // Here we also allow `alt-enter` to accept the prediction
    "alt-enter": "editor::AcceptEditPrediction"
  }
}
```

When there's a [conflict with the `tab` key](#edit-predictions-conflict), Zed uses a different context (`edit_prediction_conflict`) for the accept keybinding. To use a different keybinding in that case, insert this in your keymap:

```json
{
  "context": "Editor && edit_prediction_conflict",
  "bindings": {
    "ctrl-enter": "editor::AcceptEditPrediction" // Example of a modified keybinding
  }
}
```

If your keybinding contains a modifier (`ctrl` in the example above), it will also be used to preview the edit prediction and temporarily hide the language server completion menu.

You can also bind this action to a keybinding without a modifier. In that case, Zed will use the default modifier (`alt`) to preview the edit prediction.

```json
{
  "context": "Editor && edit_prediction_conflict",
  "bindings": {
    // Here we bind tab to accept even when there's a language server completion
    // or the cursor isn't at the correct indentation level
    "tab": "editor::AcceptEditPrediction"
  }
}
```

To keep requiring the modifier key when the language server completions menu is open, while letting `tab` accept predictions regardless of cursor position, narrow the context further with `showing_completions`:

```json
{
  "context": "Editor && edit_prediction_conflict && !showing_completions",
  "bindings": {
    // Here we don't require a modifier unless there's a language server completion
    "tab": "editor::AcceptEditPrediction"
  }
}
```

### Keybinding Example: Always Use Alt-Tab

The keybinding example below causes `alt-tab` to always be used instead of sometimes using `tab`. You might want this in order to have just one keybinding to use for accepting edit predictions, since the behavior of `tab` varies based on context.

```json
{
  "context": "Editor && edit_prediction",
  "bindings": {
    "alt-tab": "editor::AcceptEditPrediction"
  }
},
// Bind `tab` back to its original behavior.
{
  "context": "Editor",
  "bindings": {
    "tab": "editor::Tab"
  }
},
{
  "context": "Editor && showing_completions",
  "bindings": {
    "tab": "editor::ComposeCompletion"
  }
},
```

If `"vim_mode": true` is set within `settings.json`, then additional bindings are needed after the above to return `tab` to its original behavior:

```json
{
  "context": "(VimControl && !menu) || vim_mode == replace || vim_mode == waiting",
  "bindings": {
    "tab": "vim::Tab"
  }
},
{
  "context": "vim_mode == literal",
  "bindings": {
    "tab": ["vim::Literal", ["tab", "\u0009"]]
  }
},
```

### Keybinding Example: Displaying Tab and Alt-Tab on Linux

While `tab` and `alt-tab` are supported on Linux, `alt-l` is displayed instead. If your window manager does not reserve `alt-tab`, and you would prefer to use `tab` and `alt-tab`, include these bindings in `keymap.json`:

```json
{
  "context": "Editor && edit_prediction",
  "bindings": {
    "tab": "editor::AcceptEditPrediction",
    // Optional: This makes the default `alt-l` binding do nothing.
    "alt-l": null
  }
},
{
  "context": "Editor && edit_prediction_conflict",
  "bindings": {
    "alt-tab": "editor::AcceptEditPrediction",
    // Optional: This makes the default `alt-l` binding do nothing.
    "alt-l": null
  }
},
```

### Missing Keybinding {#edit-predictions-missing-keybinding}

Zed requires at least one keybinding for the {#action editor::AcceptEditPrediction} action in both the `Editor && edit_prediction` and `Editor && edit_prediction_conflict` contexts ([learn more above](#edit-predictions-keybinding)).

If you have previously bound the default keybindings to different actions in the global context, you will not be able to preview or accept edit predictions. For example:

```json
[
  // Your keymap
  {
    "bindings": {
      // Binds `alt-tab` to a different action globally
      "alt-tab": "menu::SelectNext"
    }
  }
]
```

To fix this, you can specify your own keybinding for accepting edit predictions:

```json
[
  // ...
  {
    "context": "Editor && edit_prediction_conflict",
    "bindings": {
      "alt-l": "editor::AcceptEditPrediction"
    }
  }
]
```

If you would like to use the default keybinding, you can free it up by either moving yours to a more specific context or changing it to something else.
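
To spot this problem in a large keymap, you can look for entries without a `context` that bind one of the default accept keys. The Python sketch below is a hypothetical helper, not part of Zed: it assumes your `keymap.json` has already been parsed into a list of dictionaries (Zed's keymap allows comments, which Python's `json` module rejects), and it treats `tab`, `alt-tab`, and `alt-l` as the default accept keys described on this page.

```python
# Assumed set of default accept keys, taken from the sections above.
DEFAULT_ACCEPT_KEYS = {"tab", "alt-tab", "alt-l"}


def global_bindings_shadowing_accept(keymap: list[dict]) -> list[tuple[str, str]]:
    """Find global (context-free) bindings that reuse a default accept key."""
    found = []
    for entry in keymap:
        if "context" in entry:
            continue  # Only bindings in the global context are checked.
        for key, action in entry.get("bindings", {}).items():
            if key in DEFAULT_ACCEPT_KEYS and action != "editor::AcceptEditPrediction":
                found.append((key, action))
    return found


# The problematic keymap from the "Missing Keybinding" example, already parsed.
keymap = [{"bindings": {"alt-tab": "menu::SelectNext"}}]
print(global_bindings_shadowing_accept(keymap))
```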

## Disabling Automatic Edit Prediction

You can disable edit predictions at several levels: in all buffers, for specific languages, or entirely, with no provider turned on at all.

Alternatively, if you have Zed set as your provider, consider [using Subtle Mode](#switching-modes).

### On Buffers

To stop predictions from appearing automatically as you type, set this within `settings.json`:

```json
{
  "show_edit_predictions": false
}
```

This hides every indication that a prediction is available, regardless of [the display mode](#switching-modes) you're in (valid only if you have Zed as your provider). You can still trigger edit predictions manually by running {#action editor::ShowEditPrediction} or pressing {#kb editor::ShowEditPrediction}.

### For Specific Languages

To stop predictions from appearing automatically as you type in a specific language, set this within `settings.json`:

```json
{
  "languages": {
    "Python": {
      "show_edit_predictions": false
    }
  }
}
```

### Turning Off Completely

To completely turn off edit prediction across all providers, explicitly set `edit_prediction_provider` to `"none"`:

```json
{
  "features": {
    "edit_prediction_provider": "none"
  }
}
```
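
The settings in this section combine: a provider of `"none"` turns predictions off everywhere, a per-language `show_edit_predictions` value applies to that language, and the top-level value applies otherwise. A minimal Python sketch of that precedence, assuming a per-language value overrides the top-level one and that both default to showing predictions; `predictions_shown_automatically` is a hypothetical name, and it only models automatic display (manual triggering via {#action editor::ShowEditPrediction} is unaffected).

```python
from typing import Optional


def predictions_shown_automatically(
    provider: str, global_show: bool = True, language_show: Optional[bool] = None
) -> bool:
    """Decide whether edit predictions appear as you type (hypothetical model)."""
    if provider == "none":
        # `"edit_prediction_provider": "none"` turns predictions off everywhere.
        return False
    if language_show is not None:
        # Assumed: a per-language `show_edit_predictions` wins for that language.
        return language_show
    return global_show


print(predictions_shown_automatically("zed", language_show=False))
print(predictions_shown_automatically("none", language_show=True))
```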

## Configuring GitHub Copilot {#github-copilot}

To use GitHub Copilot as your provider, set this within `settings.json`:

```json
{
  "features": {
    "edit_prediction_provider": "copilot"
  }
}
```

You should be able to sign in to GitHub Copilot by clicking the Copilot icon in the status bar and following the setup instructions.

Copilot can provide multiple completion alternatives, and these can be navigated with the following actions:

- {#action editor::NextEditPrediction} ({#kb editor::NextEditPrediction}): To cycle to the next edit prediction
- {#action editor::PreviousEditPrediction} ({#kb editor::PreviousEditPrediction}): To cycle to the previous edit prediction

## Configuring Supermaven {#supermaven}

To use Supermaven as your provider, set this within `settings.json`:

```json
{
  "features": {
    "edit_prediction_provider": "supermaven"
  }
}
```

You should be able to sign in to Supermaven by clicking the Supermaven icon in the status bar and following the setup instructions.

## See also

You may also use the [Agent Panel](./agent-panel.md) or the [Inline Assistant](./inline-assistant.md) to interact with language models. See the [AI documentation](./ai.md) for more information on the other AI features in Zed.

35

docs/src/ai/inline-assistant.md

Normal file

@ -0,0 +1,35 @@

# Inline Assistant

|

||||

|

||||

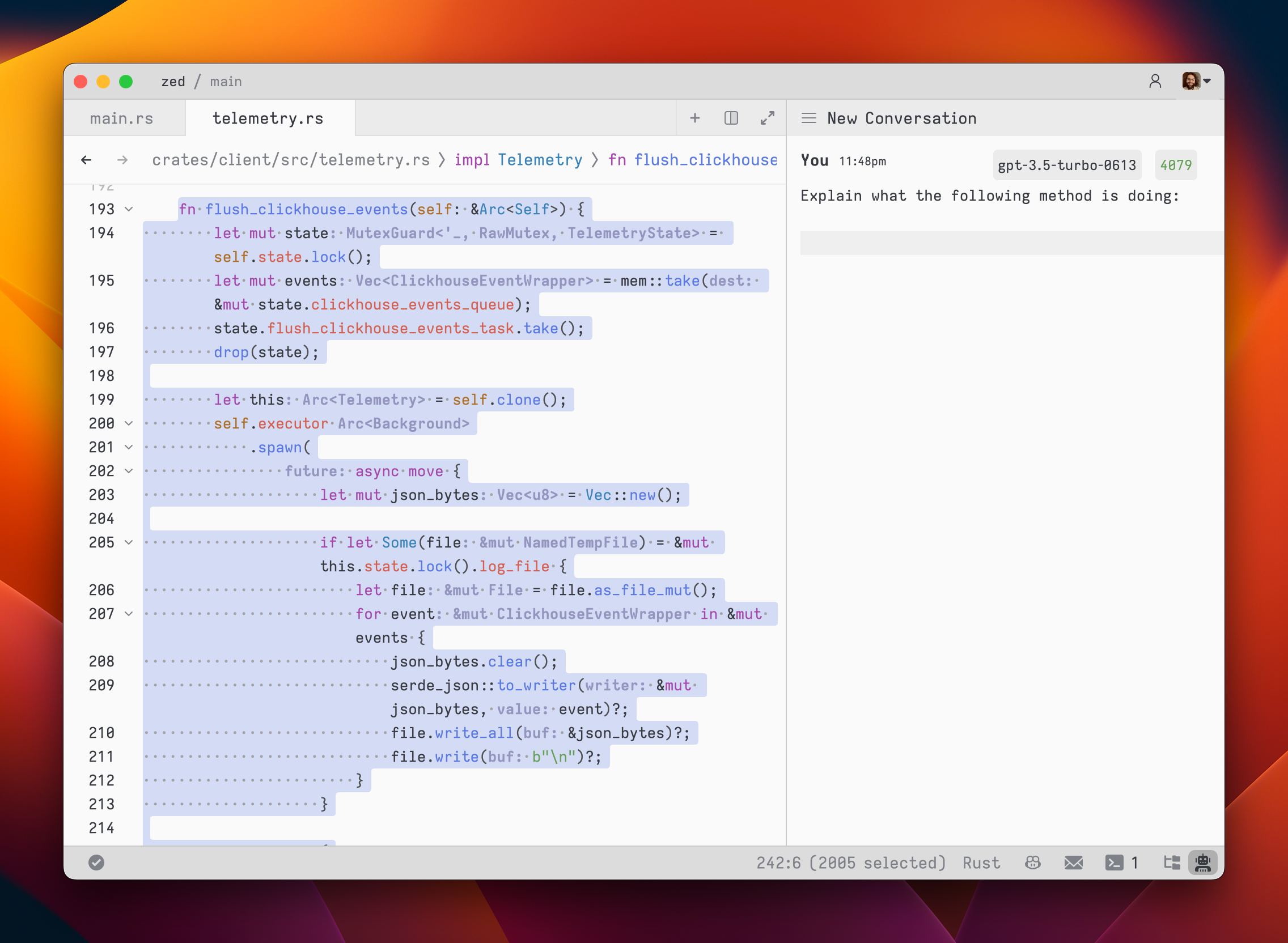

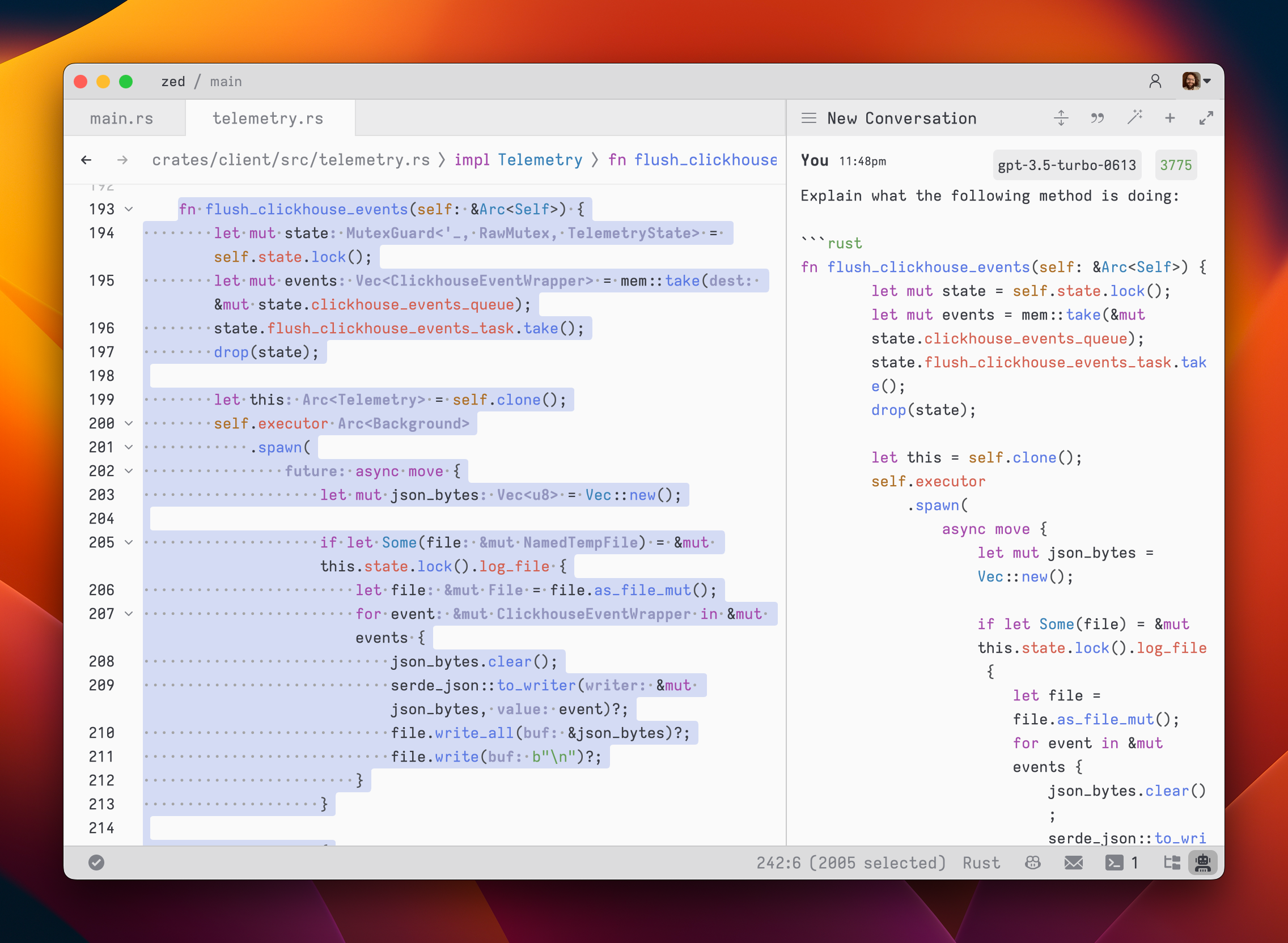

## Using the Inline Assistant

|

||||

|

||||

You can use `ctrl-enter` to open the Inline Assistant nearly anywhere you can enter text: editors, the agent panel, the prompt library, channel notes, and even within the terminal panel.

|

||||

|

||||

The Inline Assistant allows you to send the current selection (or the current line) to a language model and modify the selection with the language model's response.

|

||||

|

||||

You can use `ctrl-enter` to open the inline assistant nearly anywhere you can write text: editors, the Agent Panel, the Rules Library, channel notes, and even within the terminal panel.

|

||||

|

||||

You can also perform multiple generation requests in parallel by pressing `ctrl-enter` with multiple cursors, or by pressing `ctrl-enter` with a selection that spans multiple excerpts in a multibuffer.

|

||||

|

||||

## Context

You can give the Inline Assistant context the same way you can in the Agent Panel, allowing you to provide additional instructions or rules for code transformations with @-mentions.

A useful pattern here is to create a thread in the [Agent Panel](./agent-panel.md), and then use the `@thread` command in the Inline Assistant to include the thread as context for the Inline Assistant transformation.

## Prefilling Prompts

To create a custom keybinding that prefills a prompt, add a binding in the following format to your keymap:

```json
[
  {
    "context": "Editor && mode == full",
    "bindings": {
      "ctrl-shift-enter": [
        "assistant::InlineAssist",
        { "prompt": "Build a snake game" }
      ]
    }
  }
]
```

@ -1,6 +1,6 @@

# Model Context Protocol

Zed uses the [Model Context Protocol](https://modelcontextprotocol.io/) to interact with context servers.

> The Model Context Protocol (MCP) is an open protocol that enables seamless integration between LLM applications and external data sources and tools. Whether you're building an AI-powered IDE, enhancing a chat interface, or creating custom AI workflows, MCP provides a standardized way to connect LLMs with the context they need.

39

docs/src/ai/models.md

Normal file

@ -0,0 +1,39 @@

# Models

Zed’s plans offer hosted versions of major LLMs, generally with higher rate limits than individual API keys. We’re working hard to expand the models supported by Zed’s subscription offerings, so please check back often.

| Model             | Provider  | Max Mode | Context Window | Price per Prompt | Price per Request |
| ----------------- | --------- | -------- | -------------- | ---------------- | ----------------- |
| Claude 3.5 Sonnet | Anthropic | ❌       | 120k           | $0.04            | N/A               |
| Claude 3.7 Sonnet | Anthropic | ❌       | 120k           | $0.04            | N/A               |
| Claude 3.7 Sonnet | Anthropic | ✅       | 200k           | N/A              | $0.05             |

## Usage {#usage}

The models above can be used with the prompts included in your plan. For models not marked with [“Max Mode”](#max-mode), each prompt is counted against the monthly limit of your plan.

If you’ve exceeded your limit for the month and are on a paid plan, you can enable usage-based pricing to continue using models for the rest of the month. See [Plans and Usage](./plans-and-usage.md) for more information.

Outside [Max Mode](#max-mode), a single prompt can use up to 25 tool calls. If a prompt needs more than 25 tool calls, Zed will ask whether you’d like to continue, which consumes a second prompt. See [Max Mode](#max-mode) for how tool calls work in Max Mode.

## Max Mode {#max-mode}

In Max Mode, we enable models to use [large context windows](#context-windows), unlimited tool calls, and other capabilities for expanded reasoning, to allow an unfettered agentic experience. Because of the increased cost to Zed, each subsequent request beyond the initial user prompt in [Max Mode](#max-mode) models is counted as a prompt for metering. In addition, usage-based pricing per request is slightly more expensive for Max Mode models than usage-based pricing per prompt for regular models.

Note that using a Max Mode model in the Agent Panel may consume a good bit of your monthly prompt capacity if many tool calls are made. We encourage you to think through which model is best for your needs before leaving the agent to work.

By default, all Agent threads start in normal mode; however, you can use the agent setting `preferred_completion_mode` to start new Agent threads in Max Mode.

## Context Windows {#context-windows}

A context window is the maximum span of text and code an LLM can consider at once, including both the input prompt and the output generated by the model.

In [Max Mode](#max-mode), we increase the context window size to give models enhanced reasoning capabilities.

Each Agent thread in Zed maintains its own context window. The more prompts, attached files, and responses a thread includes, the more of its context window it uses.

For best results, take a purpose-based approach to Agent thread management, starting a new thread for each unique task.
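
To make the growth concrete, the sketch below adds up the size of each item in a thread and compares the total against the context windows listed in the table at the top of this page. It is a back-of-the-envelope Python illustration, not how Zed counts tokens: it assumes the table's `120k` and `200k` mean 120,000 and 200,000 tokens, and `fits_in_context` is a hypothetical helper that takes token counts you have estimated yourself.

```python
NORMAL_WINDOW = 120_000  # Assumed reading of "120k" in the table, in tokens.
MAX_MODE_WINDOW = 200_000  # Assumed reading of "200k" (Claude 3.7 Sonnet, Max Mode).


def fits_in_context(item_tokens: list[int], max_mode: bool = False) -> bool:
    """Check whether every prompt, file, and response in a thread fits at once."""
    window = MAX_MODE_WINDOW if max_mode else NORMAL_WINDOW
    # The window covers both input and output, so every item counts toward it.
    return sum(item_tokens) <= window


# A hypothetical thread: a prompt, two attached files, and two responses.
thread = [500, 40_000, 60_000, 8_000, 15_000]
print(sum(thread), fits_in_context(thread), fits_in_context(thread, max_mode=True))
```

Starting a new thread per task, as recommended above, resets this running total.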

## Tool Calls {#tool-calls}

Models can use [tools](./tools.md) to interface with your code, search the web, and perform other useful functions. In [Max Mode](#max-mode), models can make an unlimited number of tool calls per prompt, with each tool call counting as a prompt for metering purposes. For non-Max Mode models, you'll need to interact with the model every 25 tool calls to continue, at which point a new prompt will be counted against your plan limit.
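
The metering rules on this page can be turned into a rough estimator. This Python sketch is an illustration under stated assumptions, not Zed's billing logic: outside Max Mode it charges one prompt per started block of 25 tool calls (with every prompt counting at least once, and assuming you choose to continue each time Zed asks), and in Max Mode it assumes each tool call triggers exactly one follow-up request, each counted as a prompt on top of the initial one. `prompts_consumed` is a hypothetical name.

```python
import math

TOOL_CALLS_PER_PROMPT = 25  # Non-Max Mode limit before Zed asks to continue.


def prompts_consumed(tool_calls: int, max_mode: bool) -> int:
    """Estimate how many prompts one user prompt uses for metering (hypothetical)."""
    if tool_calls < 0:
        raise ValueError("tool_calls must be non-negative")
    if max_mode:
        # Assumed: the initial prompt plus one metered request per tool call.
        return 1 + tool_calls
    # Each started block of 25 tool calls costs one prompt; at least one is used.
    return max(1, math.ceil(tool_calls / TOOL_CALLS_PER_PROMPT))


for calls in (0, 25, 26, 60):
    print(calls, prompts_consumed(calls, max_mode=False), prompts_consumed(calls, max_mode=True))
```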

39

docs/src/ai/plans-and-usage.md

Normal file

@ -0,0 +1,39 @@

# Plans and Usage

To view your current usage, you can visit your account at [zed.dev/account](https://zed.dev/account). You’ll also find usage meters in-product when you’re nearing the limit for your plan or trial.

## Available Plans {#plans}

For costs and more information on pricing, visit [Zed’s pricing page](https://zed.dev/pricing).

Please note that if you’re interested in just using Zed as the world’s fastest editor, with no AI or subscription features, you can always do so for free, without [authentication](../accounts.md).

## Usage {#usage}

- A `prompt` in Zed is an input from the user, sent when you press enter, composed of one or many `requests`. A `prompt` can be initiated from the Agent Panel or via Inline Assist.
- A `request` in Zed is a response to a `prompt`, plus any tool calls that are initiated as part of that response. There may be one `request` per `prompt`, or many.

Most models offered by Zed are metered per prompt. Some models that use large context windows and unlimited tool calls ([“Max Mode”](./models.md#max-mode)) count each individual request within a prompt against your prompt limit, since the agentic work spawned by the prompt is expensive to support.

See [the Models page](./models.md) for which models are metered by request.

Plans come with a set number of included prompts, which varies depending on the plan you’ve selected.

## Usage-Based Pricing {#ubp}

You may opt in to usage-based pricing for prompts that exceed what is included in your paid plan from [your account page](https://zed.dev/account).

Usage-based pricing is only available with a paid plan, and is exclusively opt-in. From the dashboard, you can toggle usage-based pricing for usage exceeding your paid plan. You can also configure a spend limit in USD. Once the spend limit is hit, we’ll stop any further usage until your prompt limit resets.

We will bill for additional prompts once they total $20, or on your billing date, whichever comes first.

Cost per request for each model can be found on [the models page](./models.md).
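
Here is a minimal Python simulation of these rules for a single billing period: overage prompts accrue charges, an invoice is issued each time the accrued amount reaches $20, any remainder is billed on the billing date, and usage stops at the spend limit. It is a sketch under assumptions, not Zed's billing system: the $0.04 price comes from the models table, `simulate_overage` is a hypothetical helper, and it assumes the spend limit caps total overage spend for the period and blocks any prompt that would push spend past it.

```python
from decimal import Decimal

BILLING_THRESHOLD = Decimal("20.00")  # Bill once additional prompts total $20.


def simulate_overage(
    prompt_prices: list[Decimal], spend_limit: Decimal
) -> tuple[list[Decimal], int, int]:
    """Return (invoices, accepted_count, blocked_count) for one billing period."""
    invoices: list[Decimal] = []
    accrued = Decimal("0")  # Charges not yet invoiced.
    spent = Decimal("0")  # Total overage spend this period.
    accepted = blocked = 0
    for price in prompt_prices:
        if spent + price > spend_limit:
            blocked += 1  # Assumed: a prompt that would exceed the limit is blocked.
            continue
        spent += price
        accrued += price
        accepted += 1
        if accrued >= BILLING_THRESHOLD:
            invoices.append(accrued)
            accrued = Decimal("0")
    if accrued:
        invoices.append(accrued)  # The remainder is billed on the billing date.
    return invoices, accepted, blocked


price = Decimal("0.04")  # Per-prompt price from the models table.
print(simulate_overage([price] * 600, spend_limit=Decimal("50")))
print(simulate_overage([price] * 600, spend_limit=Decimal("10")))
```

`Decimal` is used instead of floats so that 500 prompts at $0.04 add up to exactly $20.00 and trigger the threshold on time.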

## Business Usage {#business-usage}

Email [sales@zed.dev](mailto:sales@zed.dev) with any questions on business plans, metering, and usage-based pricing.

27

docs/src/ai/privacy-and-security.md

Normal file

@ -0,0 +1,27 @@

# Privacy and Security

## Philosophy

Zed aims to collect only the minimum data necessary to serve and improve our product.

We believe in opt-in data sharing as the default in building AI products, rather than opt-out, like most of our competitors. Privacy Mode is not a setting to be toggled; it's a default stance.

As an open-source product, we believe in maximal transparency, and invite you to examine our codebase. If you find issues, we encourage you to share them with us.

It is entirely possible to use Zed without sharing any data with us and without authenticating into the product. We're happy to always support this desired use pattern.

## Documentation

- [Telemetry](../telemetry.md): How Zed collects general telemetry data.
- [AI Improvement](./ai-improvement.md): Zed's opt-in-only approach to data collection for AI improvement, whether our Agentic offering or Edit Predictions.
- [Accounts](../accounts.md): When and why you'd need to authenticate into Zed, how to do so, and what scope we need from you.

## Legal Links

- [Terms of Service](https://zed.dev/terms-of-service)
- [Terms of Use](https://zed.dev/terms)
- [Privacy Policy](https://zed.dev/privacy-policy)
- [Zed's Contributor License and Feedback Agreement](https://zed.dev/cla)
- [Subprocessors](https://zed.dev/subprocessors)

88

docs/src/ai/rules.md

Normal file

@ -0,0 +1,88 @@

# Using Rules {#using-rules}

Rules are an essential part of interacting with AI assistants in Zed. They help guide the AI's responses and ensure you get the most relevant and useful information.

Every new thread starts with the [default rules](#default-rules), which can be customized and are where your model prompting is stored.

Remember that effective prompting is an iterative process. Experiment with different prompt structures and wordings to find what works best for your specific needs and the model you're using.

Here are some tips for creating effective rules:

1. Be specific: Clearly state what you want the AI to do or explain.
2. Provide context: Include relevant information about your project or problem.
3. Use examples: If applicable, provide examples to illustrate your request.
4. Break down complex tasks: For multi-step problems, consider breaking them into smaller, more manageable rules.

## `.rules` files

Zed supports including `.rules` files at the top level of worktrees. Here, you can include project-level instructions you'd like to have included in all of your interactions with the Agent Panel. Other names for this file are also supported; Zed uses the first file in this list that it finds: `.rules`, `.cursorrules`, `.windsurfrules`, `.clinerules`, `.github/copilot-instructions.md`, or `CLAUDE.md`.
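
The first-match rule can be sketched as a lookup at the worktree root. This Python snippet is a hypothetical illustration of the documented order, not Zed's implementation; `find_rules_file` is an assumed name, and it only checks for regular files at these paths relative to the worktree root.

```python
import tempfile
from pathlib import Path
from typing import Optional

# Candidate names, in the priority order listed above.
RULES_FILE_NAMES = [
    ".rules",
    ".cursorrules",
    ".windsurfrules",
    ".clinerules",
    ".github/copilot-instructions.md",
    "CLAUDE.md",
]


def find_rules_file(worktree_root: Path) -> Optional[Path]:
    """Return the first rules file (hypothetical helper) found at the worktree root."""
    for name in RULES_FILE_NAMES:
        candidate = worktree_root / name
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "CLAUDE.md").write_text("Prefer small functions.\n")
        (root / ".cursorrules").write_text("Use descriptive names.\n")
        # `.cursorrules` wins because it comes earlier in the list than `CLAUDE.md`.
        print(find_rules_file(root).name)
```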

Zed also supports creating rules in the Rules Library that can be included in any interaction with the Agent Panel.

## Rules Library {#rules-library}

The Rules Library is an interface for writing and managing rules. Like other text-driven UIs in Zed, it is a full editor with syntax highlighting, keyboard shortcuts, etc.

You can use the Inline Assistant right in the rules editor, allowing you to automate and rewrite rules.

### Opening the Rules Library

1. Open the Agent Panel.
2. Click on the `Agent Menu` (`...`) in the top right corner.
3. Select `Rules...` from the dropdown.

You can also use the `assistant: open rules library` command while in the Agent Panel.

### Managing Rules

Once a rules file is selected, you can edit it directly in the built-in editor. Its title can be changed from the editor title bar as well.

Rules can be duplicated, deleted, or added to the default rules using the buttons in the rules editor.

## Creating Rules {#creating-rules}

To create a rule file, simply open the `Rules Library` and click the `+` button. Rules files are stored locally and can be accessed from the library at any time.

Having a series of rules files specifically tailored to prompt engineering can also help you write consistent and effective rules.

The process of writing and refining prompts is commonly referred to as "prompt engineering."

More on prompt engineering:

- [Anthropic: Prompt Engineering](https://docs.anthropic.com/en/docs/build-with-claude/prompt-engineering/overview)
- [OpenAI: Prompt Engineering](https://platform.openai.com/docs/guides/prompt-engineering)

## Editing the Default Rules {#default-rules}

Zed allows you to customize the default rules used when interacting with LLMs. More precisely, Zed combines a series of rules to form the default rules.

To edit rules, select `Rules...` from the `Agent Menu` icon (`...`) in the upper right-hand corner, or use the {#kb assistant::OpenRulesLibrary} keyboard shortcut.

A default set of rules might look something like:

```plaintext
[-] Default
  [+] Today's date
  [+] You are an expert
  [+] Don't add comments
```

Default rules appear at the top of the rules list and are automatically included in the context of every new thread.

You can manually add other rules as context using the `@rule` command.

> **Note:** Remember, commands are only evaluated when the context is created, so a command like `@file` won't continuously update.