+

+  +

+ [projector_config.pbtxt](https://github.com/tensorflow/tensorflow/blob/master/tensorflow/tensorboard/plugins/projector/projector_config.proto)

+in the same directory as your checkpoint file.

+

+### Setup

+

+For in-depth information on how to run TensorBoard and make sure you are

+logging all the necessary information, see

+[TensorBoard: Visualizing Learning](../get_started/summaries_and_tensorboard.md).

+

+To visualize your embeddings, there are 3 things you need to do:

+

+1) Set up a 2D tensor that holds your embedding(s).

+

+```python

+embedding_var = tf.get_variable(....)

+```

+

+2) Periodically save your model variables in a checkpoint in

+LOG_DIR.

+

+```python

+saver = tf.train.Saver()

+saver.save(session, os.path.join(LOG_DIR, "model.ckpt"), step)

+```

+

+3) (Optional) Associate metadata with your embedding.

+

+If you have any metadata (labels, images) associated with your embedding, you

+can tell TensorBoard about it either by directly storing a

+[projector_config.pbtxt](https://github.com/tensorflow/tensorflow/blob/master/tensorflow/tensorboard/plugins/projector/projector_config.proto)

+in the LOG_DIR, or by using our Python API.

+

+For instance, the following projector_config.pbtxt associates the

+word_embedding tensor with metadata stored in $LOG_DIR/metadata.tsv:

+

+```

+embeddings {

+ tensor_name: 'word_embedding'

+ metadata_path: '$LOG_DIR/metadata.tsv'

+}

+```

+

+The same config can be produced programmatically using the following code snippet:

+

+```python

+import os

+import tensorflow as tf

+from tensorflow.contrib.tensorboard.plugins import projector

+

+# Create randomly initialized embedding weights which will be trained.

+vocabulary_size = 10000

+embedding_size = 200

+embedding_var = tf.get_variable('word_embedding', [vocabulary_size, embedding_size])

+

+# Format: tensorflow/tensorboard/plugins/projector/projector_config.proto

+config = projector.ProjectorConfig()

+

+# You can add multiple embeddings. Here we add only one.

+embedding = config.embeddings.add()

+embedding.tensor_name = embedding_var.name

+# Link this tensor to its metadata file (e.g. labels).

+embedding.metadata_path = os.path.join(LOG_DIR, 'metadata.tsv')

+

+# Use the same LOG_DIR where you stored your checkpoint.

+summary_writer = tf.summary.FileWriter(LOG_DIR)

+

+# The next line writes a projector_config.pbtxt in the LOG_DIR. TensorBoard will

+# read this file during startup.

+projector.visualize_embeddings(summary_writer, config)

+```

+

+After running your model and training your embeddings, run TensorBoard and point

+it to the LOG_DIR of the job.

+

+```sh

+tensorboard --logdir=LOG_DIR

+```

+

+Then click on the *Embeddings* tab on the top pane

+and select the appropriate run (if there is more than one run).

+

+

+### Metadata

+Usually embeddings have metadata associated with them (e.g. labels, images). The

+metadata should be stored in a separate file outside of the model checkpoint

+since the metadata is not a trainable parameter of the model. The format should

+be a [TSV file](https://en.wikipedia.org/wiki/Tab-separated_values)

+(tab characters shown as `\t`) with the first line containing column headers

+and subsequent lines containing the metadata values:

+

+

+Word\tFrequency

+ Airplane\t345

+ Car\t241

+ ...

+

+

+There is no explicit key shared with the main data file; instead, the order in

+the metadata file is assumed to match the order in the embedding tensor. In

+other words, the first line is the header information and the (i+1)-th line in

+the metadata file corresponds to the i-th row of the embedding tensor stored in

+the checkpoint.

+

+Note: If the TSV metadata file has only a single column, then we don’t expect a

+header row, and assume each row is the label of the embedding. We include this

+exception because it matches the commonly-used "vocab file" format.
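
The two metadata layouts described above can be sketched as follows. This is a minimal illustration, not part of the TensorBoard API: `write_metadata` is a hypothetical helper, and the temporary `LOG_DIR`, the file names, and the sample words are assumptions made for the example.

```python
import csv
import os
import tempfile


def write_metadata(path, rows, header=None):
    """Write projector metadata as TSV, one line per embedding row.

    `rows` must be in the same order as the rows of the embedding tensor.
    Pass `header` only for multi-column metadata; single-column files are
    written without a header row.
    """
    with open(path, "w", newline="") as f:
        writer = csv.writer(f, delimiter="\t", lineterminator="\n")
        if header is not None:
            writer.writerow(header)
        for row in rows:
            # Accept a bare label for the single-column ("vocab file") case.
            writer.writerow(row if isinstance(row, (list, tuple)) else [row])


# Hypothetical log directory and vocabulary, for illustration only.
LOG_DIR = tempfile.mkdtemp()

# Multi-column metadata: header row first, then one line per embedding row.
metadata_path = os.path.join(LOG_DIR, "metadata.tsv")
write_metadata(metadata_path, [("Airplane", 345), ("Car", 241)],
               header=("Word", "Frequency"))

# Single-column metadata: no header, each line is a label.
vocab_path = os.path.join(LOG_DIR, "vocab.tsv")
write_metadata(vocab_path, ["Airplane", "Car"])
```

Because the files share no key with the embedding tensor, the safest approach is to generate the metadata from the same ordered vocabulary that indexes the embedding rows.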

+

+### Images

+If you have images associated with your embeddings, you will need to

+produce a single image consisting of small thumbnails of each data point.

+This is known as the

+[sprite image](https://www.google.com/webhp#q=what+is+a+sprite+image).

+The sprite should have the same number of rows and columns with thumbnails

+stored in row-first order: the first data point placed in the top left and the

+last data point in the bottom right:

+

+| 0 | +1 | +2 | +

| 3 | +4 | +5 | +

| 6 | +7 | ++ |
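
One way to assemble such a sprite is sketched below with NumPy. `make_sprite` is a hypothetical helper rather than a TensorFlow function, and the dummy thumbnails are assumptions for illustration; it assumes all thumbnails share the same height, width, and channel count, and it leaves unused cells black.

```python
import math

import numpy as np


def make_sprite(thumbnails):
    """Tile N thumbnails of shape (h, w, c) into one square sprite image."""
    thumbnails = np.asarray(thumbnails)
    n, h, w, c = thumbnails.shape
    grid = int(math.ceil(math.sqrt(n)))  # same number of rows and columns
    sprite = np.zeros((grid * h, grid * w, c), dtype=thumbnails.dtype)
    for i in range(n):
        row, col = divmod(i, grid)  # row-first order, starting at the top left
        sprite[row * h:(row + 1) * h, col * w:(col + 1) * w] = thumbnails[i]
    return sprite


# Eight dummy 2x2 single-channel thumbnails; thumbnail i is filled with i + 1.
thumbs = np.stack([np.full((2, 2, 1), i + 1, dtype=np.uint8) for i in range(8)])
sprite = make_sprite(thumbs)  # 3x3 grid with the last cell left empty
```

The resulting array can then be written to disk with any image library before TensorBoard is pointed at it.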


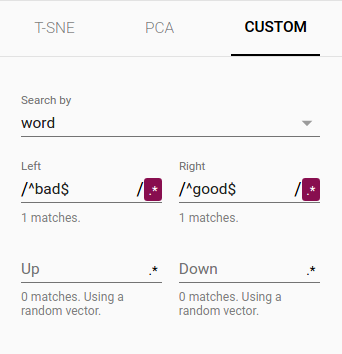

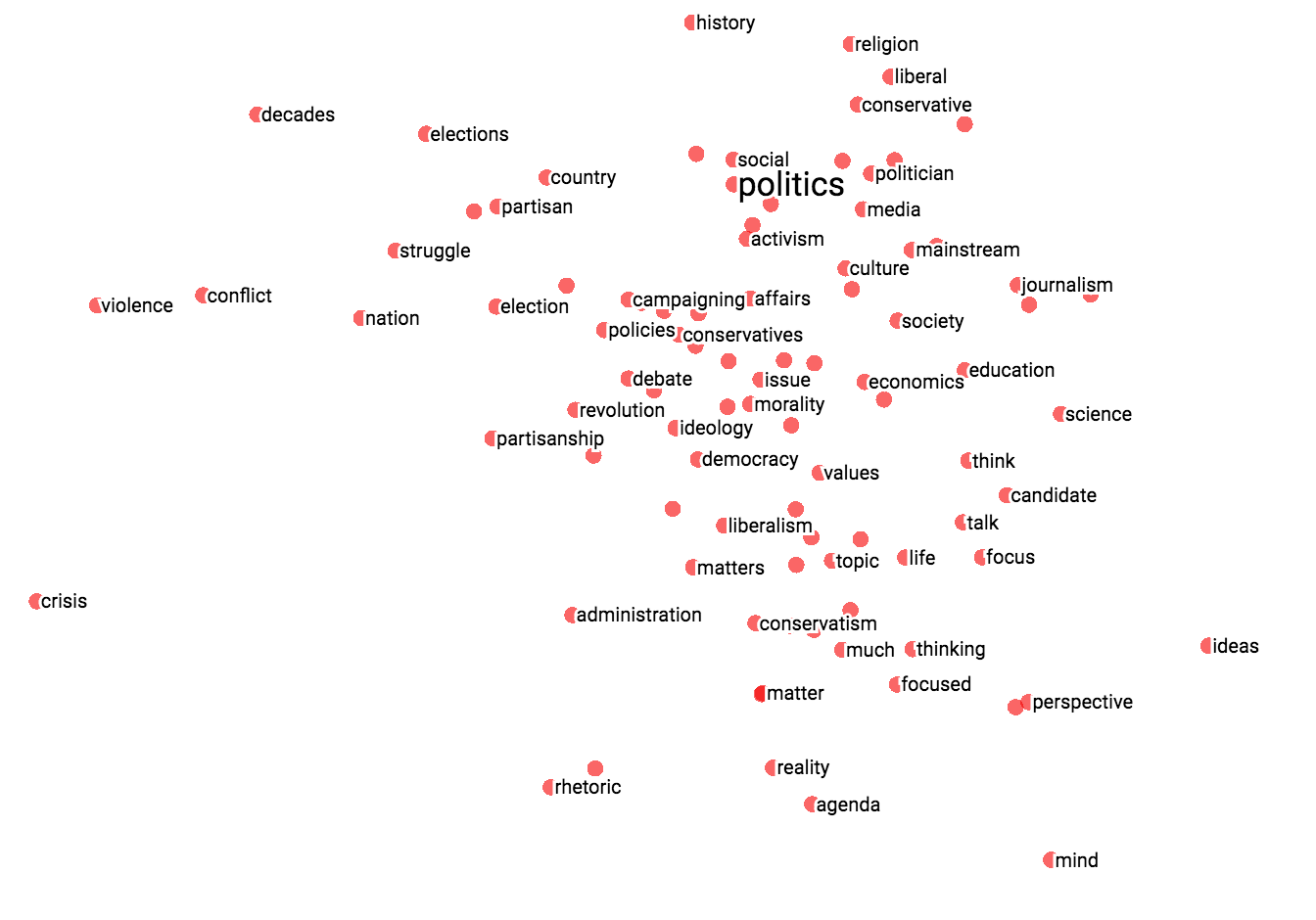

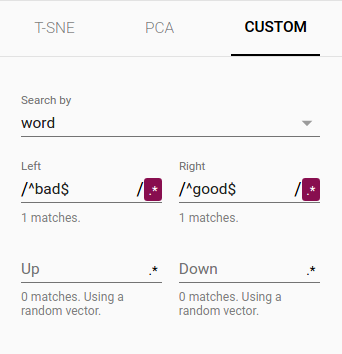

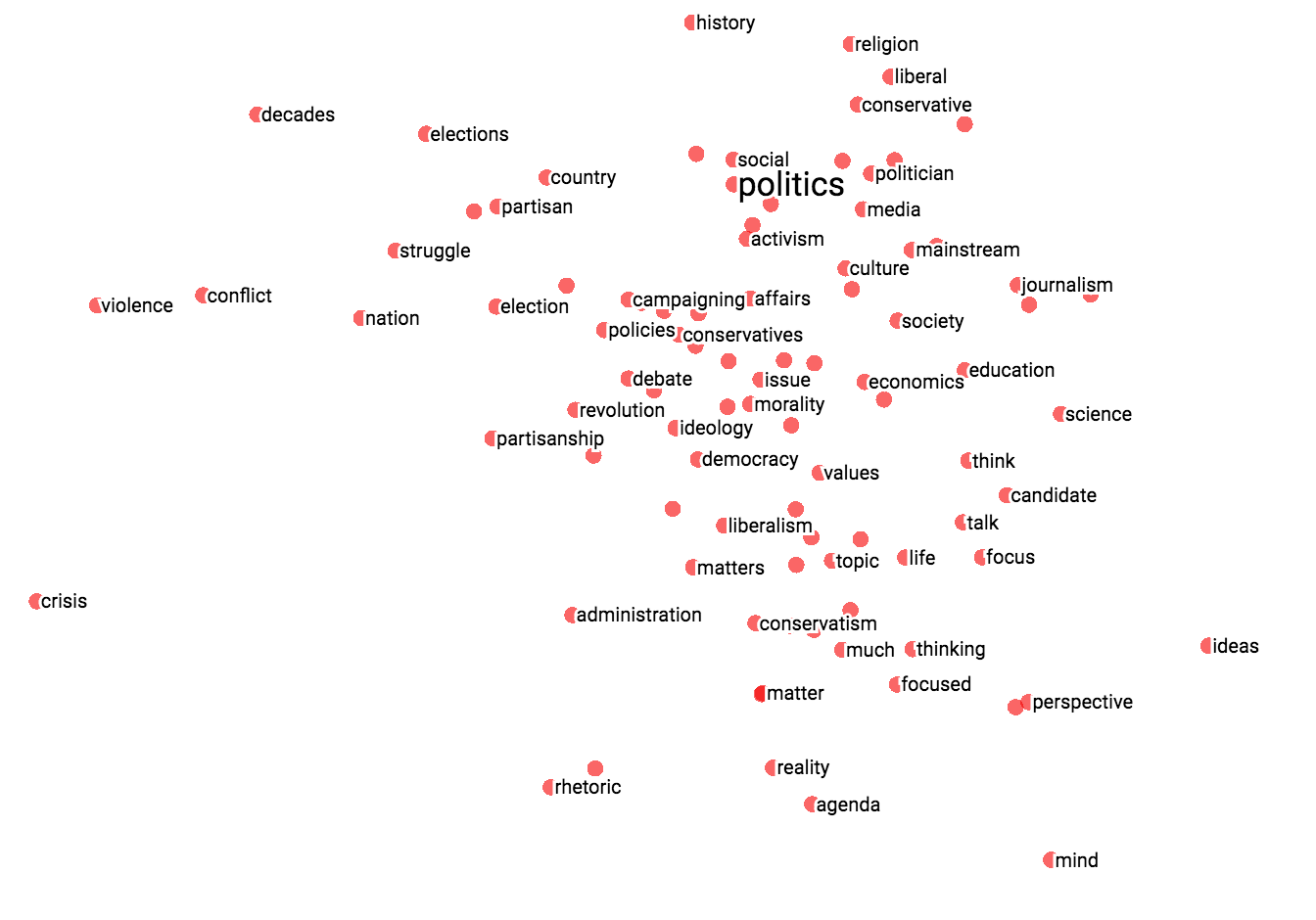

+| Custom projection controls. | Custom projection of neighbors of "politics" onto "best" - "worst" vector. |

+

+

+## Mini-FAQ

+

+**Is "embedding" an action or a thing?**

+Both. People talk about embedding words in a vector space (action) and about

+producing word embeddings (things). Common to both is the notion of embedding

+as a mapping from discrete objects to vectors. Creating or applying that

+mapping is an action, but the mapping itself is a thing.

+

+**Are embeddings high-dimensional or low-dimensional?**

+It depends. A 300-dimensional vector space of words and phrases, for instance,

+is often called low-dimensional (and dense) when compared to the millions of

+words and phrases it can contain. But mathematically it is high-dimensional,

+displaying many properties that are dramatically different from what our human

+intuition has learned about 2- and 3-dimensional spaces.
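
As one illustration of such a property (a sketch under stated assumptions, not a claim about any particular embedding), random directions in a 300-dimensional space are almost always nearly orthogonal, whereas in 3 dimensions they are not. The helper name `mean_abs_cosine`, the sample size, and the seed are choices made for this example.

```python
import numpy as np


def mean_abs_cosine(dim, num_vectors=200, seed=0):
    """Average |cosine similarity| over all pairs of random unit vectors."""
    rng = np.random.default_rng(seed)
    vectors = rng.standard_normal((num_vectors, dim))
    vectors /= np.linalg.norm(vectors, axis=1, keepdims=True)
    cosines = vectors @ vectors.T
    upper = np.triu_indices(num_vectors, k=1)  # each pair once, no diagonal
    return float(np.abs(cosines[upper]).mean())


low = mean_abs_cosine(3)     # random 3-D directions are often far from orthogonal
high = mean_abs_cosine(300)  # random 300-D directions are nearly orthogonal
print(low, high)
```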

+

+**Is an embedding the same as an embedding layer?**

+No; an embedding layer is part of a neural network, but an embedding is a more

+general concept.
